I finally finished watching the BBC documentary series Can’t Get You Out of My Head by Adam Curtis. It is hard to describe this series, which is cut entirely from archival footage, with Curtis’ voice interpreting and linking the diverse clips. The subtitle is “An Emotional History of the Modern World”, which is true in that the clips are often strangely affecting, but it doesn’t convey the broad social and political connections Curtis makes in the narration. He is trying out a set of theses about recent history in China, the US, the UK, and Russia leading up to Brexit and Trump. I’m still digesting the six-part series, but here are some of the threads running through it:

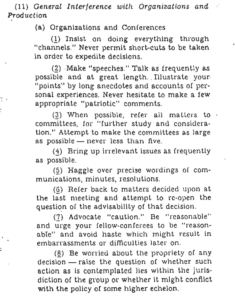

- Conspiracies. He traces our fascination with, and now belief in, conspiracies back to a memo by Jim Garrison in 1967 about the JFK assassination. The memo, Time and Propinquity: Factors in Phase I, presents results of an investigative technique built on finding patterns of linkages between fragments of information. When you find strange coincidences you weave a story (a conspiracy) to join them, rather than starting with a theory and checking it against the facts. This reminds me of what software like Palantir does – it makes (often coincidental) connections easy to find so you can tell stories. Curtis later follows the evolution of conspiracies as a political force, leading to liberal conspiracy theories about Trump (that he was a Russian agent) and alt-right conspiracy theories like QAnon. We are all willing to surrender our independence of thought for the joys of conspiracies.

- Big Data Surveillance and AI. Curtis connects this new mode of investigation to what big data platforms like Google now do with AI. They gather lots of fragments of information about us and then a) use them to train AIs, and b) sell inferences drawn from the data to advertisers, while keeping us anxious through the promotion of emotional content. Big data can deal with the complexity of a world which we have given up on trying to control. It promises to manage the complexity of the fragments by finding patterns in them. This reminds me of discussions around the End of Theory and the shift from theories to correlations.

- Psychology. Curtis also connects this to emerging psychological theories about how our minds may be fragmented, with different unconscious urges moving us. Psychology then offers ways to figure out what people really want and to nudge or prime them. This is what Cambridge Analytica promised, and we believed it could deliver such services partly because of conspiracy theories. Curtis argues at the end that behavioural psychology can’t replicate many of the experiments undergirding nudging. He suggests that all this big data manipulation doesn’t work, though the platforms can heighten our anxiety and emotional stress. A particularly disturbing section of the last episode is the discussion of how the US developed “enhanced” torture techniques based on these ideas after 9/11 to create “learned helplessness” in prisoners. The idea was to fragment their consciousness so that they would release a flood of these fragments, some of which might be useful intelligence.

- Individualism. A major theme is the rise of individualism since the war and how individuals are controlled. China’s social credit model of explicit control through surveillance is contrasted with the Western model of control through consumer-driven platform surveillance. Either way, Curtis’ conclusion seems to be that we need to regain confidence in our own individual powers to choose our future and strive for it. We need to stop letting others control us with fear or distract us with consumption.

In some ways the series is a plea for everyone to make up their own stories from their fragmentary experience. The series starts with this quote:

The ultimate hidden truth of the world is that it is something we make, and could just as easily make differently. (David Graeber)

Of course, Curtis’ series could just be a conspiracy story that he wove out of the fragments he found in the BBC archives.