> You talk to yourself.

Hi I’m Rameau. You probably don’t know me, but since you’re reading this I’m going to assume you do indeed exist.

> You [ask why] i’m wondering what consciousness is.

I have no idea. But it’s fun to think about. Is this a person? No, because it’s conscious. Is it alive? No, because it doesn’t respond to external stimuli.

> You [ask] why does a person have to respond to external stimuli.

Because then we can interact with it!!!!! But isn’t consciousness an internal phenomenon? Then how would the person interact with anything else? Oh wait, that’s right. We’re not limited by our perception of reality.

I’ve been trying out AI Dungeon, Latitude’s text generation game. The game is built on OpenAI’s GPT-3. You can see a bit of a conversation I had with it in a cyberpunk world.

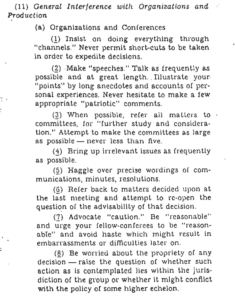

Latitude has gotten into trouble with OpenAI because it seems that the game was generating erotic content featuring children. A number of people turned to AI Dungeon precisely because it could be used to explore adult themes, which would seem to be a good thing, but some may have gone too far. See the Wired story “It Began as an AI-Fueled Dungeon Game. It Got Much Darker.” This raises interesting ethical questions:

- Why do so many players use it to generate erotic content?

- Who is responsible for the erotic content? OpenAI, Latitude, or the players?

- Are there ethical reasons to generate erotic content featuring children? Do we forbid people from writing novels like Lolita?

- How can inappropriate content be prevented without crippling the AI? Are filters enough?

The problem of AIs generating toxic language is nicely shown by the web page “Evaluating Neural Toxic Degeneration in Language Models.” The interactives and graphs on the page let you see how toxic language can be generated by many of the popular language generation AIs. The problem seems to lie in the data sets used to train the models, such as those that include scrapes of Reddit.

This exploratory tool illustrates research reported in the paper “RealToxicityPrompts: Evaluating Neural Toxic Degeneration in Language Models.” You can see a neat visualization of the connected papers here.