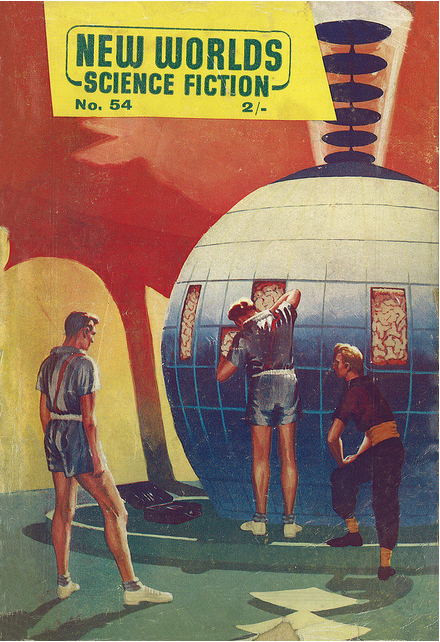

Gerard Quinn’s cover for the December 1956 issue of New Worlds

Thanks to Ali I came across this compilation of Adventures in Science Fiction Cover Art: Disembodied Brains. Joachim Boaz has assembled a number of pulp sci-fi covers showing giant brains. The giant brain was often the way computing was imagined. In fact, early computers were called giant brains.

Disembodied brains — in large metal womb-like containers, floating in space or levitating in the air (you know, implying PSYCHIC POWER), pulsating in glass chambers, planets with brain-like undulations, pasted in the sky (GOD!, surprise) above the Garden of Eden replete with mechanical contrivances among the flowers and butterflies and naked people… The possibilities are endless, and more often than not, taken in rather absurd directions.

I wonder if we can plot some of the early beliefs about computers through these images and stories of giant brains. What did we think the brain/mind was such that a big one would have exaggerated powers? The equation would go something like this:

- A brain is the seat of intelligence

- The bigger the brain, the more intelligent

- In big brains we might see emergent properties (like telepathy)

- Scaling up the brain will give us artificially effective intelligence

This is what science fiction does so well – it takes some aspect of current science or culture and scales it up to imagine the consequences. Scaling brains seems a bit literal, but the imagined futures are nonetheless important.