Isabel Pedersen gave the Stéfan Sinclair lecture at Concordia on Create Me, Break Me, Remember Me: Art and AI in the Age of Reinvention. Among other things she talked about the project Fabric of Digital Life, which documents over 5000 augmentation projects/tools/platforms. This is a fascinating database.

Category: Science Fiction

Dario Amodei: Machines of Loving Grace

Dario Amodei of Anthropic fame has published a long essay on AI titled Machines of Loving Grace: How AI Could Transform the World for Better. In the essay he says that he doesn’t like the term AGI and prefers to talk about “powerful AI,” and he provides a set of characteristics he considers important, including the ability to work on issues in a sustained fashion over time.

Amodei also doesn’t worry much about the Singularity, as he believes powerful AI will still have to deal with real-world problems, like building physical systems, when designing more powerful AI. I tend to agree.

The point of the essay is, however, to focus on five categories of positive applications of AI that are possible:

- Biology and physical health

- Neuroscience and mental health

- Economic development and poverty

- Peace and governance

- Work and meaning

The essay is long, so I won’t go into detail. What is important is that he articulates a set of positive goals that AI could help with in these categories. He calls his vision both radical and obvious. In a sense he is right – we have stopped trying to imagine a better world through technology, whether out of cynicism or attention only to details.

Throughout writing this essay I noticed an interesting tension. In one sense the vision laid out here is extremely radical: it is not what almost anyone expects to happen in the next decade, and will likely strike many as an absurd fantasy. Some may not even consider it desirable; it embodies values and political choices that not everyone will agree with. But at the same time there is something blindingly obvious—something overdetermined—about it, as if many different attempts to envision a good world inevitably lead roughly here.

We Might Be in a Simulation. How Much Should That Worry Us?

We may not be able to prove that we are in a simulation, but at the very least, it will be a possibility that we can’t rule out. But it could be more than that. Chalmers argues that if we’re in a simulation, there’d be no reason to think it’s the only simulation; in the same way that lots of different computers today are running Microsoft Excel, lots of different machines might be running an instance of the simulation. If that was the case, simulated worlds would vastly outnumber non-sim worlds — meaning that, just as a matter of statistics, it would be not just possible that our world is one of the many simulations but likely.

The New York Times has a fun opinion piece to the effect that We Might Be in a Simulation. How Much Should That Worry Us? This follows on Nick Bostrom’s essay Are you living in a computer simulation? that argues that either advanced posthuman civilizations don’t run lots of simulations of the past or we are in one.

The opinion is partly a review of a recent book by David Chalmers, Reality+: Virtual Worlds and the Problems of Philosophy (which I haven’t read). Chalmers thinks there is a good chance we are in a simulation, and if so, there are probably others.

I am also reminded of Hervé Le Tellier’s novel The Anomaly, where a plane full of people pops out of the clouds for the second time, creating an anomaly where there are two instances of each person on the plane. This is taken as a glitch that may indicate that we are in a simulation, which raises all sorts of questions about whether there are actually anomalies that might indicate that this really is a simulation or a complicated idea in God’s mind (think Bishop Berkeley’s idealism).

For me the challenge is the complexity of the world I experience. I can’t help thinking that a posthuman society modelling things really doesn’t need such a rich world as I experience. For that matter, would there really be enough computing to do it? Is this simulation fantasy just a virtual reality version of the singularity hypothesis prompted by the new VR technologies coming on stream?

AI Dungeon

AI Dungeon, an infinitely generated text adventure powered by deep learning.

Robert told me about AI Dungeon, a text adventure system that uses GPT-2, a language model from OpenAI that got a lot of attention when it was “released” in 2019. OpenAI felt it was too good to release openly as it could be misused. Instead they released a toy version. Now they have GPT-3, about which I wrote before.

AI Dungeon allows you to choose the type of world you want to play in (fantasy, zombies …). It then generates an infinite game by basically generating responses to your input. I assume there is some memory as it repeats my name and the basic setting.

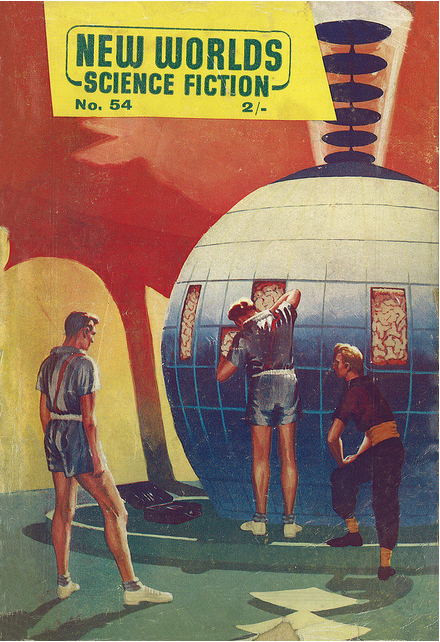

Adventures in Science Fiction Cover Art: Disembodied Brains, Part I | Science Fiction and Other Suspect Ruminations

Gerard Quinn’s cover for the December 1956 issue of New Worlds

Thanks to Ali I came across this compilation of Adventures in Science Fiction Cover Art: Disembodied Brains. Joachim Boaz has assembled a number of pulp sci-fi covers showing giant brains. The giant brain was often the way computing was imagined. In fact early computers were called giant brains.

Disembodied brains — in large metal womb-like containers, floating in space or levitating in the air (you know, implying PSYCHIC POWER), pulsating in glass chambers, planets with brain-like undulations, pasted in the sky (GOD!, surprise) above the Garden of Eden replete with mechanical contrivances among the flowers and butterflies and naked people… The possibilities are endless, and more often than not, taken in rather absurd directions.

I wonder if we can plot some of the early beliefs about computers through these images and stories of giant brains. What did we think the brain/mind was such that a big one would have exaggerated powers? The equation would go something like this:

- A brain is the seat of intelligence

- The bigger the brain, the more intelligent

- In big brains we might see emergent properties (like telepathy)

- Scaling up the brain will give us artificially effective intelligence

This is what science fiction does so well – it takes some aspect of current science or culture and scales it up to imagine the consequences. Scaling brains, however, seems a bit literal, but the imagined futures are nonetheless important.

How Science Fiction Imagined the 2020s

What ‘Blade Runner,’ cyberpunk, and Octavia Butler had to say about the age we’re entering now

2020 is not just any year. Because it is shorthand for perfect vision, it is a date that people liked to imagine in the past. OneZero, a Medium publication, has a nice story on How Science Fiction Imagined the 2020s (Jan. 17, 2020). The article looks at stories like Blade Runner (1982) that predicted what these years would be like. How accurate were they? Did they get the spirit of this age right? The author, Tim Maughan, reflects on why so many stories of the 1980s and early 1990s seem to be concerned with many of the same issues that concern us now. He sees a similar concern with inequality and boom/bust economies. He also sees sci-fi writers like Octavia Butler paying attention back then to climate change.

It was also the era when climate change started to make the news for the first time, and while it didn’t find its way into the public consciousness quickly enough, it certainly seemed to have grabbed the interest of science fiction writers.

Slaughterbots

On the Humanist discussion list John Keating recommended the short video Slaughterbots that presents a plausible scenario where autonomous drones are used to target dissent using social media data. Watch it! It is well done and presents real issues in a credible short video.

While the short is really about autonomous weapons and the need to ban them, I note that one of the ideas included is that dissent could be silenced by using social media to target people. The scenario imagines that university students who shared a dissenting video on social media have their data harvested (including images of their faces) and the drones target them using face recognition. Science fiction, but suggestive of how social media presence can be used for control.

Of Course Citizens Should Be Allowed to Kick Robots | WIRED

Seen in the wild, robots often appear cute and nonthreatening. This doesn’t mean we shouldn’t be hostile.

Wired magazine has a nice short piece that suggests, Of Course Citizens Should Be Allowed to Kick Robots. In some ways the point of the essay is not how to treat robots, but whether we should tolerate these surveillance robots on our sidewalks.

Blanket no-punching policies are useless in a world full of terrible people with even worse ideas. That’s even more true in a world where robots now do those people’s bidding. Robots, like people, are not all the same. While K5 can’t threaten bodily harm, the data it collects can cause real problems, and the social position it puts us all in—ever suspicious, ever watched, ever worried about what might be seen—is just as scary. At the very least, it’s a relationship we should question, not blithely accept as K5 rolls by.

The question, “Is it OK to kick a robot?” is a good one that nicely brings the ethics of human-robot co-existence down to earth and onto our sidewalks. What sort of respect does the proliferation of robots and scooters deserve? How should we treat these stupid things when they enter our everyday spaces?

The Real Threat of Artificial Intelligence – The New York Times

It’s not robot overlords. It’s economic inequality and a new global order.

Kai-Fu Lee has written a short and smart speculation on the effects of AI, The Real Threat of Artificial Intelligence. To summarize his argument:

- AI is not going to take over the world the way the sci-fi stories have it.

- The effect will be on tasks as AI takes over tasks that people are paid to do, putting them out of work.

- How then will we deal with the unemployed? (This is a question people asked in the 1960s when the first wave of computerization threatened massive unemployment.)

- One solution is “Keynesian policies of increased government spending” paid for by taxing the companies made wealthy by AI. This spending would pay for “service jobs of love” where people act as the “human interface” to all sorts of services.

- Those in the jobs that can’t be automated and that make lots of money might also scale back on their time at work so as to provide more jobs of this sort.


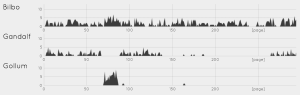

LOTRProject: Visualizing the Lord of the Rings

Emil Johansson, a student in Gothenburg, has created a fabulous site called the LOTRProject (or Lord Of The Rings Project). The site provides different types of visualizations about Tolkien’s world (Silmarillion, Hobbit, and LOTR) from maps to family trees to character mentions (see image above).
