The Scholarly Publishing and Academic Resources Coalition (SPARC) is drawing attention to the need for “Addressing the Alarming Systems of Surveillance Built By Library Vendors.” The call was prompted by a story in The Intercept, “LexisNexis to Provide Giant Database of Personal Information to ICE.”

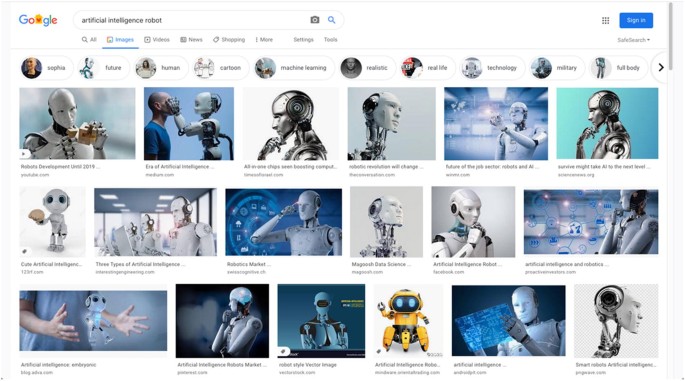

The company’s databases offer an oceanic computerized view of a person’s existence; by consolidating records of where you’ve lived, where you’ve worked, what you’ve purchased, your debts, run-ins with the law, family members, driving history, and thousands of other types of breadcrumbs, even people particularly diligent about their privacy can be identified and tracked through this sort of digital mosaic. LexisNexis has gone even further than merely aggregating all this data: The company claims it holds 283 million distinct individual dossiers of 99.99% accuracy tied to “LexIDs,” unique identification codes that make pulling all the material collected about a person that much easier. For an undocumented immigrant in the United States, the hazard of such a database is clear. (The Intercept)

That LexisNexis has been building databases on people isn’t new. Sarah Brayne’s book on predictive policing, Predict and Surveil, describes, among other things, how the LAPD uses Palantir and how the police databases integrated into Palantir are enhanced by commercial databases like those sold by LexisNexis. (An excerpt from the book is available as the essay Enter the Dragnet.)

I suspect environments like Palantir make all sorts of smaller, specialized databases more commercially valuable, which is leading companies that were once library database providers to expand their business. Before, a database about repossessions might have been of interest only to a specialized community. Now it can be linked to other information and becomes another dimension of data. In particular, these databases provide information about all the people who aren’t in police databases. They provide the breadcrumbs needed to surveil those not documented elsewhere.

The SPARC call points out that we (academics, university libraries) have been funding these database providers:

Dollars from library subscriptions, directly or indirectly, now support these systems of surveillance. This should be deeply concerning to the library community and to the millions of faculty and students who use their products each day and further underscores the urgency of privacy protections as library services—and research and education more generally—are now delivered primarily online.

This raises the question of our complicity and of whether we could do without some of these companies. At a deeper level it raises questions about the curiosity of the academy. We are dedicated to knowledge as an unalloyed good, yet we are also at the heart of a large system of surveillance – surveillance of the past, of literature, of nature, of the cosmos, and of ourselves.