I’m republishing here a blog essay, originally in Italian, that Domenico Fiormonte posted on Infolet and that is worth reading.

Why basing universities on digital platforms will lead to their demise

By Domenico Fiormonte

(All links removed. They can be found in the original post – English Translation by Desmond Schmidt)

A group of professors from Italian universities have written an open letter on the consequences of using proprietary digital platforms in distance learning. They hope that a discussion on the future of education will begin as soon as possible and that the investments discussed in recent weeks will be used to create a public digital infrastructure for schools and universities.

Dear colleagues and students,

as you already know, since the COVID-19 emergency began, Italian schools and universities have relied on proprietary platforms and tools for distance learning (including exams), which are mostly produced by the “GAFAM” group of companies (Google, Apple, Facebook, Microsoft and Amazon). There are a few exceptions, such as the Politecnico di Torino, which has instead adopted its own custom-built solutions. However, on July 16, 2020 the European Court of Justice issued a very important ruling, which essentially says that US companies do not guarantee user privacy in accordance with the European General Data Protection Regulation (GDPR). As a result, all data transfers from the EU to the United States must be regarded as non-compliant with this regulation, and are therefore illegal.

A debate on this issue is currently underway in the EU, and the European Authority has explicitly invited “institutions, offices, agencies and organizations of the European Union to avoid transfers of personal data to the United States for new procedures or when securing new contracts with service providers.” In fact the Irish Authority has explicitly banned the transfer of Facebook user data to the United States. Finally, some studies underline how the majority of commercial platforms used during the “educational emergency” (primarily G-Suite) pose serious legal problems and represent a “systematic violation of the principles of transparency.”

In this difficult situation, various organizations, including (as stated below) some university professors, are trying to help Italian schools and universities comply with the ruling. They do so in the interests not only of the institutions themselves, but also of teachers and students, who have the right to study, teach and discuss without being surveilled, profiled and catalogued. The inherent risks in outsourcing teaching to multinational companies, which can do as they please with our data, are not only cultural or economic, but also legal: anyone, in this situation, could complain to the privacy authority to the detriment of the institution for which they are working.

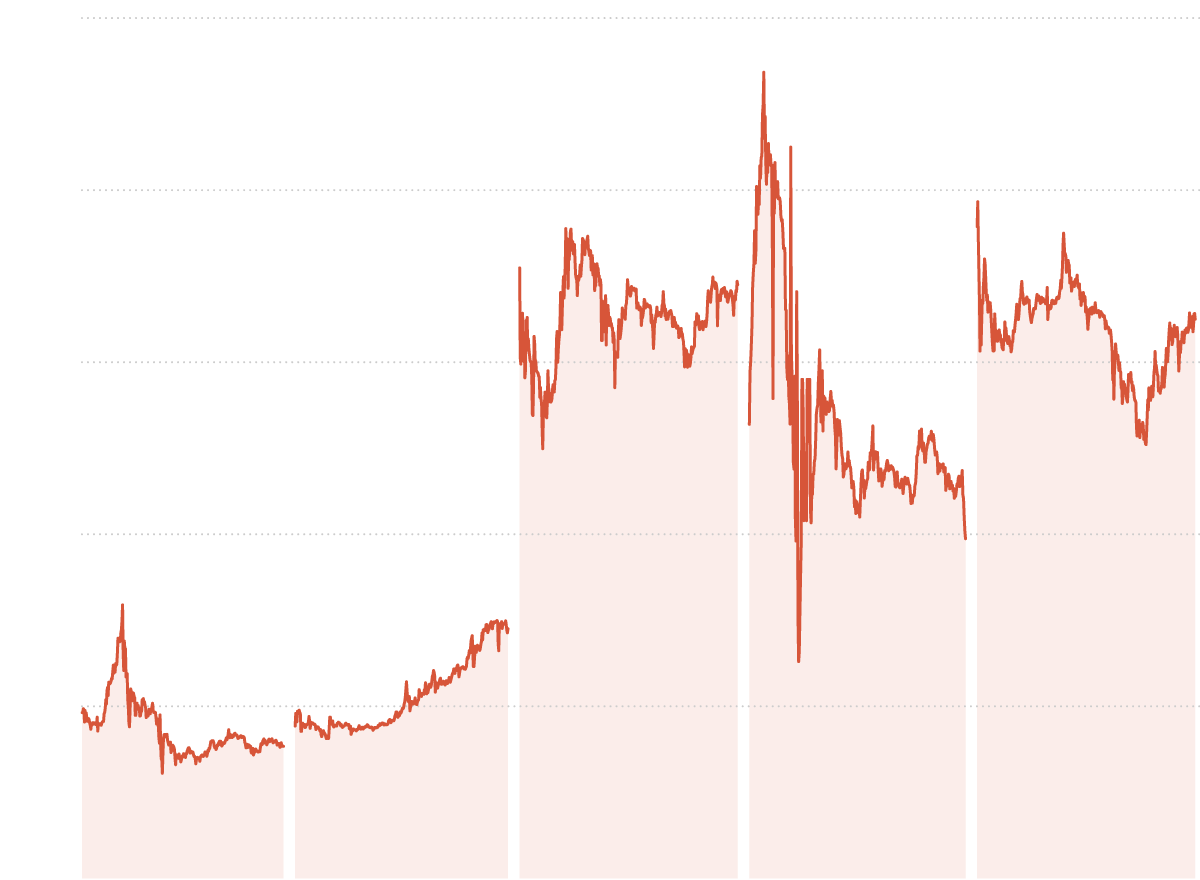

However, the question goes beyond our own right, or that of our students, to privacy. In the renewed COVID emergency we know that there are enormous economic interests at stake, and the digital platforms, which in recent months have increased their turnover (see the study published in October by Mediobanca), now have the power to shape the future of education around the world. An example is what is happening in Italian schools with the national “Smart Class” project, financed with EU funds by the Ministry of Education. This is a package of “integrated teaching” where Pearson contributes the content for all the subjects, Google provides the software, and the hardware is the Acer Chromebook. (Incidentally, Pearson is the second largest publisher in the world, with a turnover of more than 4.5 billion euros in 2018.) And for the schools that join, it is not possible to buy other products.

Finally, although it may seem like science fiction, in addition to stabilizing proprietary distance learning as an “offer”, there is already talk of using artificial intelligence to “support” teachers in their work.

For all these reasons, a group of professors from various Italian universities decided to take action. Our initiative is not currently aimed at presenting an immediate complaint to the data protection officer, but at avoiding it, by allowing teachers and students to create spaces for discussion and by encouraging them to make choices that combine their freedom of teaching with their right to study. Only if the institutional response is insufficient or absent will we register, as a last resort, a complaint with the national privacy authority. In this case the first step will be to exploit the “flaw” opened by the EU court ruling to push the Italian privacy authority to intervene (indeed, the former President, Antonello Soro, had already done so, but received no response). The purpose of these actions is certainly not to “block” the platforms that provide distance learning and those who use them, but to push the government to finally invest in the creation of a public infrastructure based on free software for scientific communication and teaching (on the model of what is proposed here and which is already a reality for example in France, Spain and other European countries).

As we said above, before appealing to the national authority, a preliminary stage is necessary. Everyone must write to the data protection officer (DPO) requesting some information (attached here is the facsimile of the form for teachers we have prepared). If no response is received within thirty days, or if the response is considered unsatisfactory, we can proceed with the complaint to the national authority. At that point, the conversation will change, because the complaint to the national authority can be made not only by individuals, but also by groups or associations. It is important to emphasize that, even in this avoidable scenario, the question to the data controller is not necessarily a “protest” against the institution, but an attempt to turn it into a better working and study environment for everyone, conforming to European standards.