It was another black mark on the privacy record of the social network, which also reported its quarterly earnings.

The New York Times has a story, “Facebook to Pay $550 Million to Settle Facial Recognition Suit” (Natasha Singer and Mike Isaac, Jan. 29, 2020). The Illinois case concerns Facebook’s face recognition technology, which was part of the Tag Suggestions feature that suggested names for people in photos. Apparently in Illinois it is illegal to harvest biometric data without consent. The Biometric Information Privacy Act (BIPA), passed in 2008, “guards against the unlawful collection and storing of biometric information” (Wikipedia entry).

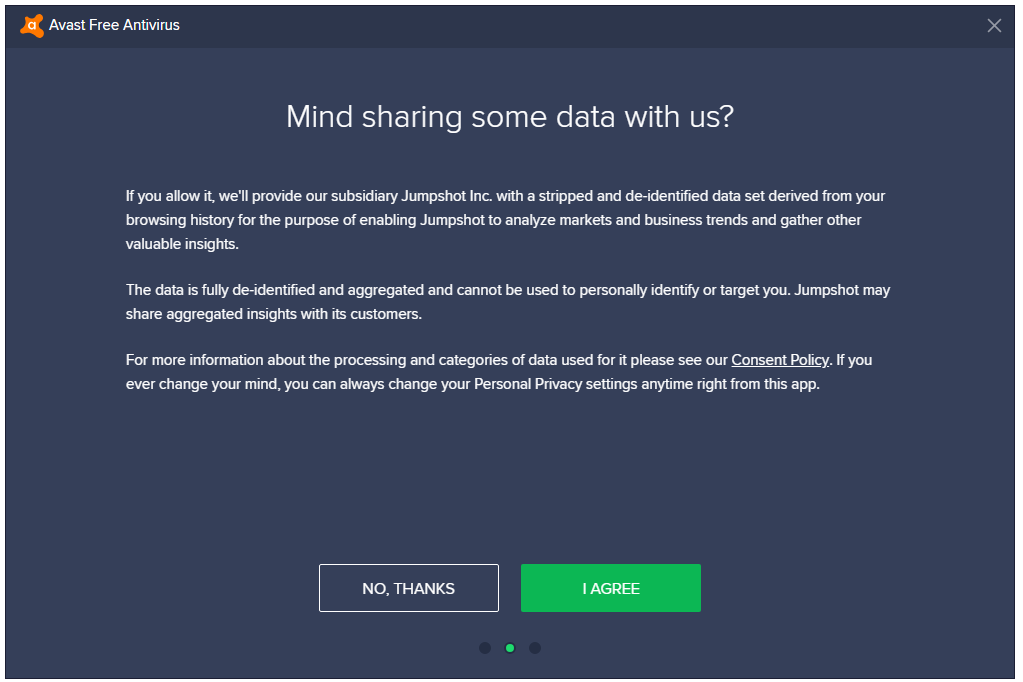

BIPA suggests a possible answer to the question of what is unethical about face recognition. While I realize that a law is not ethics (and vice versa), BIPA hints at one of the ways we can try to unpack the ethics of face recognition. The position suggested by BIPA would go something like this:

- Face recognition depends on biometric data that is extracted from an image or another form of scan.

- To collect and store biometric data one needs the consent of the person whose data is collected.

- The data has to be securely stored.

- The data has to be destroyed in a timely manner.

- If there is consent, secure storage, and timely deletion of the data, then the system/service can be said to not be unethical.
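The position above amounts to a conjunction of three conditions. Here is a minimal sketch in Python of how that reasoning is structured. The class and field names are hypothetical ones I made up for illustration; nothing here comes from the text of BIPA itself, and real compliance is obviously not reducible to three booleans.

```python
from dataclasses import dataclass


@dataclass
class BiometricHandling:
    """Hypothetical summary of how a service treats one person's biometric data."""
    has_consent: bool       # the person agreed to collection and storage
    securely_stored: bool   # the data is protected while it is held
    deleted_on_time: bool   # the data is destroyed in a timely manner


def meets_bipa_style_position(handling: BiometricHandling) -> bool:
    """Return True only if consent, secure storage and timely deletion all hold."""
    return (
        handling.has_consent
        and handling.securely_stored
        and handling.deleted_on_time
    )
```

On this reading, a service that stores the data securely and deletes it promptly but never asked for consent would still fail the test, which is roughly the complaint in the Illinois case.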

There are a number of interesting points to be made about this position. First, it is not the gathering, storing and providing access to images of people that is at issue. Face recognition is an ethical issue because biometric data about a person is being extracted, stored and used. Thus Google Image Search is not an issue, as it stores data about whole images, while FB stores information about the faces of individual people (along with associated information).

This raises issues about the nature of biometric data. What is the line between a portrait (image) and biometric information? Would gathering biographical data about a person become biometric at some point if it contained descriptions of their person?

Second, my reading is that a service like Clearview AI could also be sued if they scrape images of people in Illinois and extract biometric data. This could provide an answer to the question of what is ethically wrong about the Clearview AI service. (See my previous blog entry on this.)

Third, I think there is a missing further condition that should be specified, namely that the company gathering the biometric data should identify the purpose for which it is gathering the data when seeking consent, and should limit its use of the data to the identified uses. When the company no longer needs the data for the identified use, it should destroy it. This is essentially part of the PIPA principle of Limiting Use, Disclosure and Retention. It is assumed that if one is to delete data in a timely fashion there will be some usage criteria that determine timeliness, but that isn’t always the case. Sometimes it is just the passage of time.
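To make this further condition concrete, here is a minimal sketch in Python of a purpose-limitation check. The `Consent` type, the function names and the purpose labels are all hypothetical and are mine, not drawn from BIPA or PIPA; the sketch only shows the shape of the rule, in which uses are checked against what was consented to and deletion is triggered once no consented purpose remains.

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable


@dataclass(frozen=True)
class Consent:
    """Hypothetical record of the purposes a person agreed to when consenting."""
    purposes: FrozenSet[str]


def use_is_permitted(consent: Consent, purpose: str) -> bool:
    """A use is permitted only if it was identified when consent was sought."""
    return purpose in consent.purposes


def should_destroy(consent: Consent, purposes_still_needed: Iterable[str]) -> bool:
    """Data should be destroyed once none of the consented purposes is still needed."""
    return not (consent.purposes & set(purposes_still_needed))


# Example with made-up purpose labels.
consent = Consent(purposes=frozenset({"tag_suggestions"}))
tagging_ok = use_is_permitted(consent, "tag_suggestions")
ads_ok = use_is_permitted(consent, "ad_targeting")
destroy_now = should_destroy(consent, purposes_still_needed=[])
```

Note that the deletion trigger here is usage based. A purely time-based retention limit would need a separate check, which is the gap mentioned above.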

Of course, the value of data mining is often in the unanticipated uses of data like biometric data. Unanticipated uses are, by definition, not uses that were identified when seeking consent, unless the consent was so broad as to be meaningless.

No doubt more issues will occur to me.