An experimental open-source attempt to make GPT-4 fully autonomous. – Auto-GPT/README.md at master · Torantulino/Auto-GPT

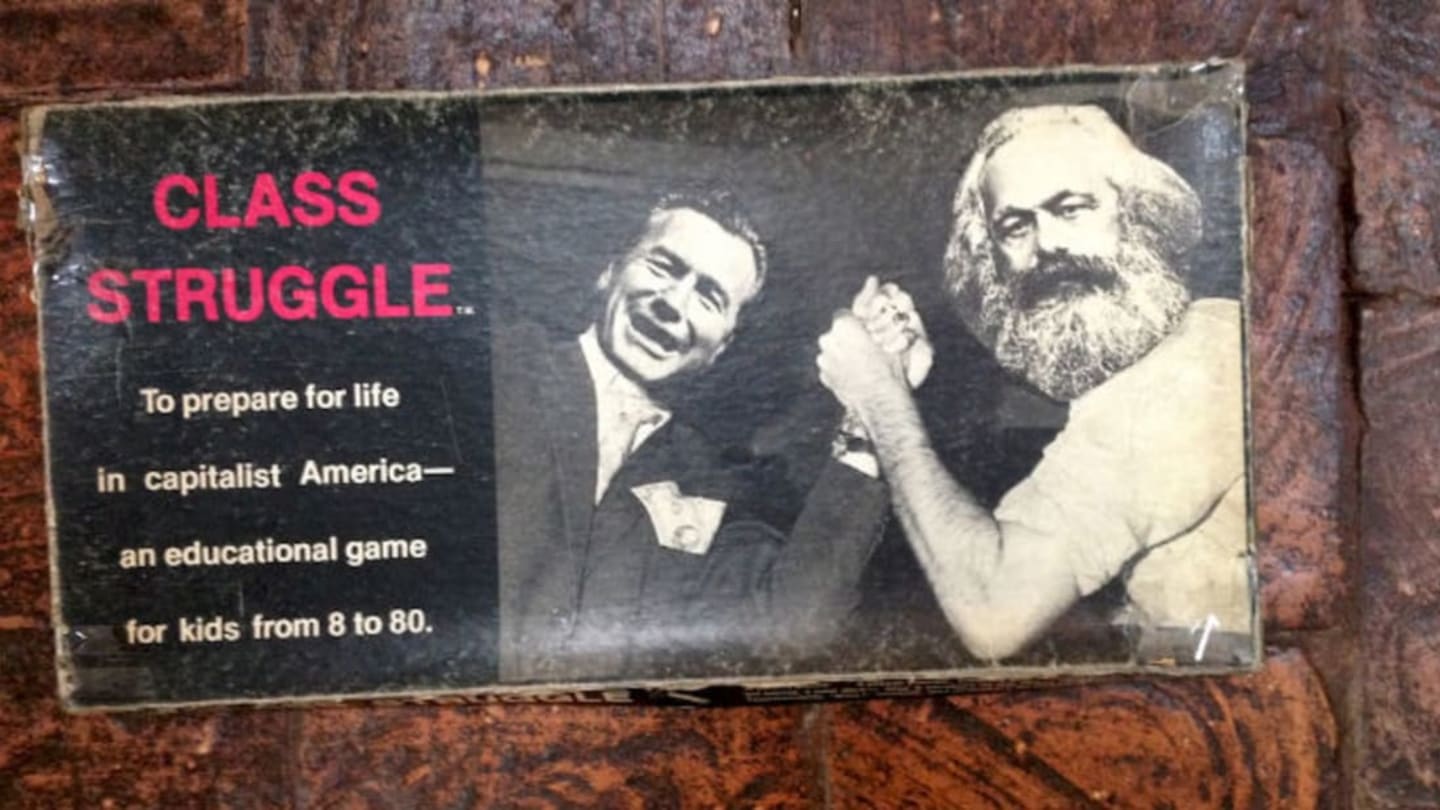

From a video on 3 Quarks Daily about whether ChatGPT can prompt itself, I discovered Auto-GPT. Auto-GPT is powered by GPT-4. You describe a mission, and it tries to launch tasks, assess them, and complete the mission. It was inevitable that someone would find a way to use ChatGPT or one of its relatives to attempt complicated jobs, including taking over the world, as Chaos-GPT (which uses Auto-GPT) claims to want to do.

How long will it be before someone figures out how to use these tools to do something truly nasty? I give it about six months before we get stories of generative AI being used to systematically harass people, to find information on how to harm people, or to waste resources the way the paperclip maximizer does. Is it surprising that governments like Italy's have banned ChatGPT?