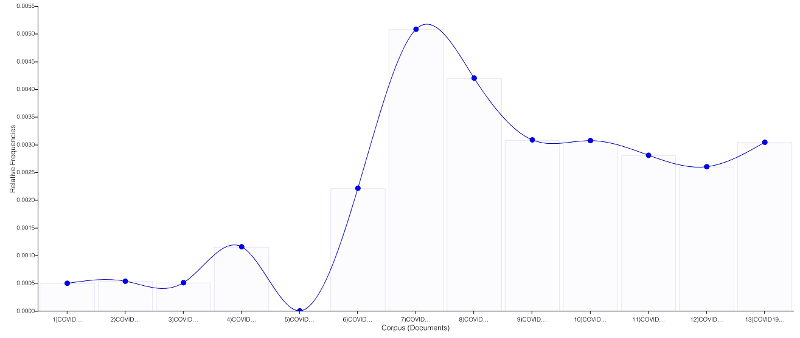

Within a few days of the announcement that libraries, schools and colleges across the nation would be closing due to the COVID-19 global pandemic, we launched the temporary National Emergency Library to provide books to support emergency remote teaching, research activities, independent scholarship, and intellectual stimulation during the closures. […]

According to the Internet Archive blog, the Temporary National Emergency Library is to close 2 weeks early, returning to traditional controlled digital lending. The National Emergency Library (NEL) was open to anyone in the world during a time when physical libraries were closed. It made books the IA had digitized available to read online. It was supposed to close at the end of June, but is closing early because four commercial publishers decided to sue.

The blog entry points to what the HathiTrust is doing as part of its Emergency Temporary Access Service, which lets member libraries (and the U of Alberta Library is one) provide access to digital copies of books for which they hold corresponding physical copies. This is only available to “member libraries that have experienced unexpected or involuntary, temporary disruption to normal operations, requiring it to be closed to the public”.

It is a pity the IA NEL was discontinued. For a moment there it looked like large public service digital libraries might become normal. Instead it looks like we will have a mix of commercial e-book services and Controlled Digital Lending (CDL) offered by libraries that have the physical books and the digital resources to organize it. The IA blog entry goes on to note that even CDL is under attack. Here is a story from Plagiarism Today:

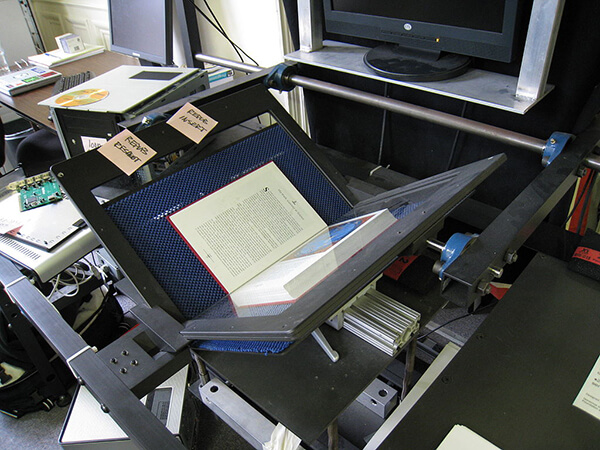

Though the National Emergency Library may have been what provoked the lawsuit, the complaint itself is much broader. Ultimately, it targets the entirety of the IA’s digital lending practices, including the scanning of physical books to create digital books to lend.

The IA has long held that its practices are covered under the concept of controlled digital lending (CDL). However, as the complaint notes, the idea has not been codified by a court and is, at best, very controversial. According to the complaint, the practice of scanning a physical book for digital lending, even when the number of copies is controlled, is an infringement.