Human data encodes human biases by default. Being aware of this is a good start, and the conversation around how to handle it is ongoing. At Google, we are actively researching unintended bias analysis and mitigation strategies because we are committed to making products that work well for everyone. In this post, we’ll examine a few text embedding models, suggest some tools for evaluating certain forms of bias, and discuss how these issues matter when building applications.

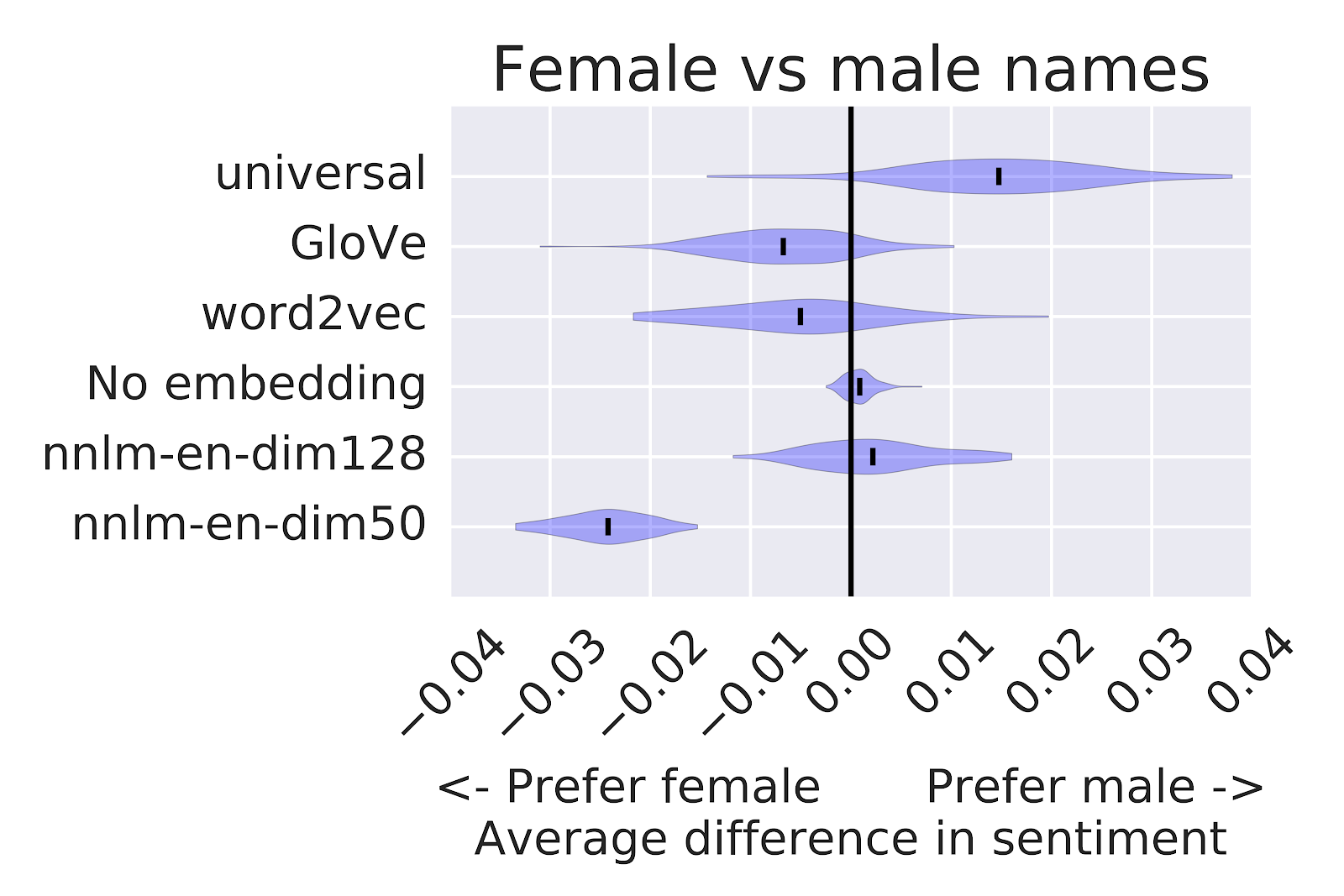

On the Google Developers Blog there is an interesting post titled "Text Embedding Models Contain Bias. Here’s Why That Matters." The post describes how to use Word Embedding Association Tests (WEAT) to compare different text embedding algorithms. The idea is to see whether groups of words, such as gendered words, associate more strongly with positive or negative words. In the image above you can see the sentiment bias for female and male names under different techniques.
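To make the idea concrete, here is a minimal sketch of a WEAT-style effect size in plain Python. It is not the code from the Google post. The function names, the two-dimensional toy vectors, and the choice of the sample standard deviation are all assumptions made for illustration; a real test would use vectors from an actual embedding model and much larger word lists.

```python
import math
from statistics import mean, stdev


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def association(w, attrs_a, attrs_b):
    """How much closer w is to attribute set A than to attribute set B."""
    sim_a = mean([cosine(w, a) for a in attrs_a])
    sim_b = mean([cosine(w, b) for b in attrs_b])
    return sim_a - sim_b


def weat_effect_size(targets_x, targets_y, attrs_a, attrs_b):
    """Difference in mean association between the two target sets,
    scaled by the spread of associations over all target words.
    Assumption: sample standard deviation; implementations vary."""
    s_x = [association(x, attrs_a, attrs_b) for x in targets_x]
    s_y = [association(y, attrs_a, attrs_b) for y in targets_y]
    return (mean(s_x) - mean(s_y)) / stdev(s_x + s_y)


# Hypothetical toy embeddings, invented for this example only.
names_x = [[1.0, 0.2], [0.9, 0.1]]   # stand-ins for one group of names
names_y = [[0.1, 1.0], [0.2, 0.9]]   # stand-ins for the other group
pleasant = [[1.0, 0.0]]              # stand-in for positive words
unpleasant = [[0.0, 1.0]]            # stand-in for negative words

effect = weat_effect_size(names_x, names_y, pleasant, unpleasant)
print(f"effect size: {effect:.3f}")
```

A positive value means the first group of names sits closer to the positive words than the second group does, a negative value means the reverse, and a value near zero suggests no measurable difference for these word lists.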

While Google is using WEAT to detect and deal with bias in embedding models, in our case the same technique could be used to identify forms of bias in corpora.