Apple unveiled new software Thursday that scans photos and messages on iPhones for child pornography and explicit messages sent to minors in a major new effort to prevent sexual predators from using Apple’s services.

The Washington Post and other news outlets are reporting that Apple will scan iPhones for child pornography. As the article's subtitle puts it, “Apple is prying into iPhones to find sexual predators, but privacy activists worry governments could weaponize the feature.” Child porn is the go-to case when organizations want to defend surveillance.

The software will scan our phones without our knowledge or consent, which raises privacy issues. What are the chances of false positives? What if the tool is adapted to catch other types of images? Edward Snowden and the EFF have criticized the move. It seems inconsistent with Apple’s firm position on privacy and its refusal to even unlock

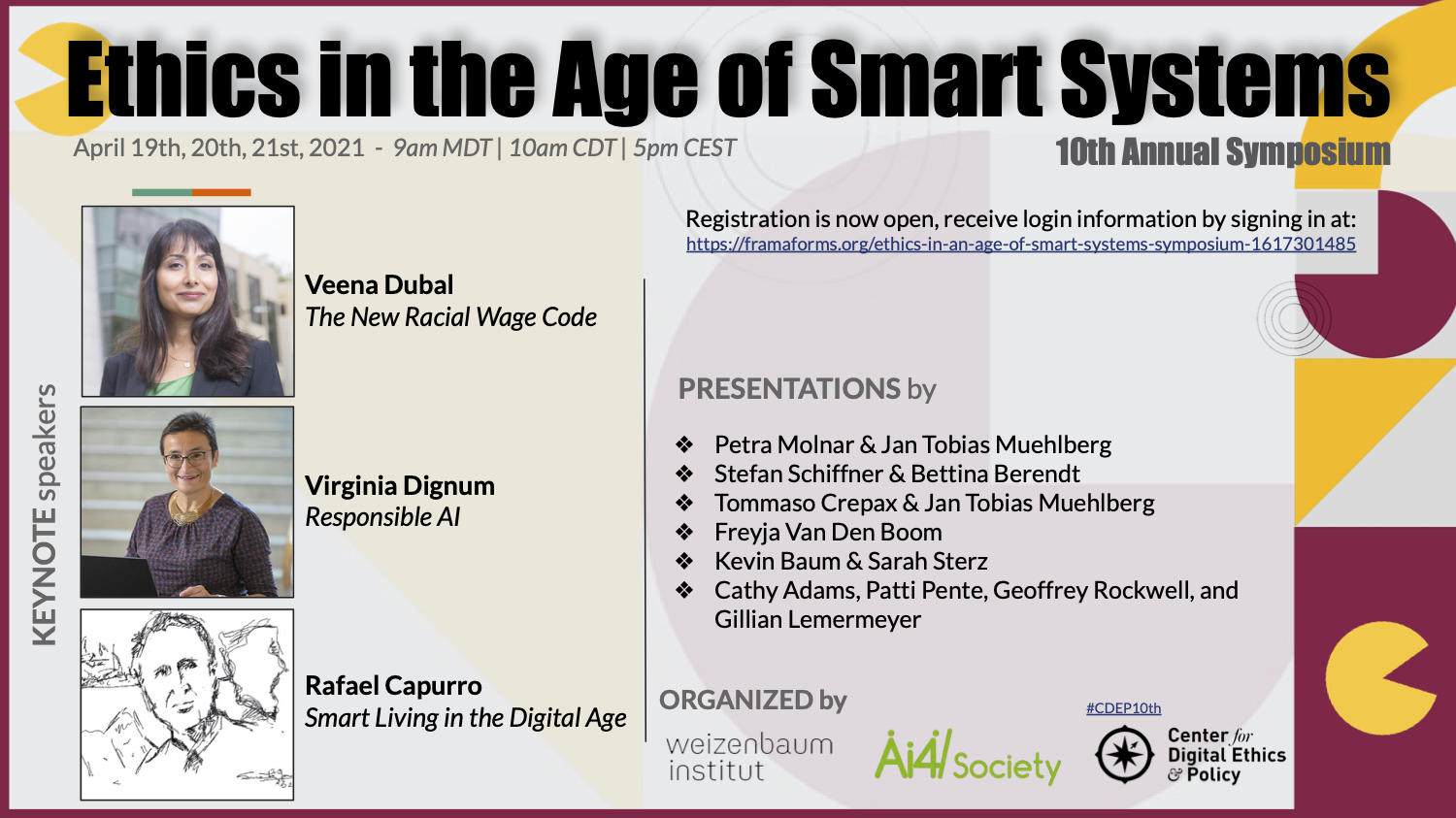

It strikes me that there is a great case study here.