The law has not caught up. In the United States, the use of facial recognition is almost wholly unregulated.

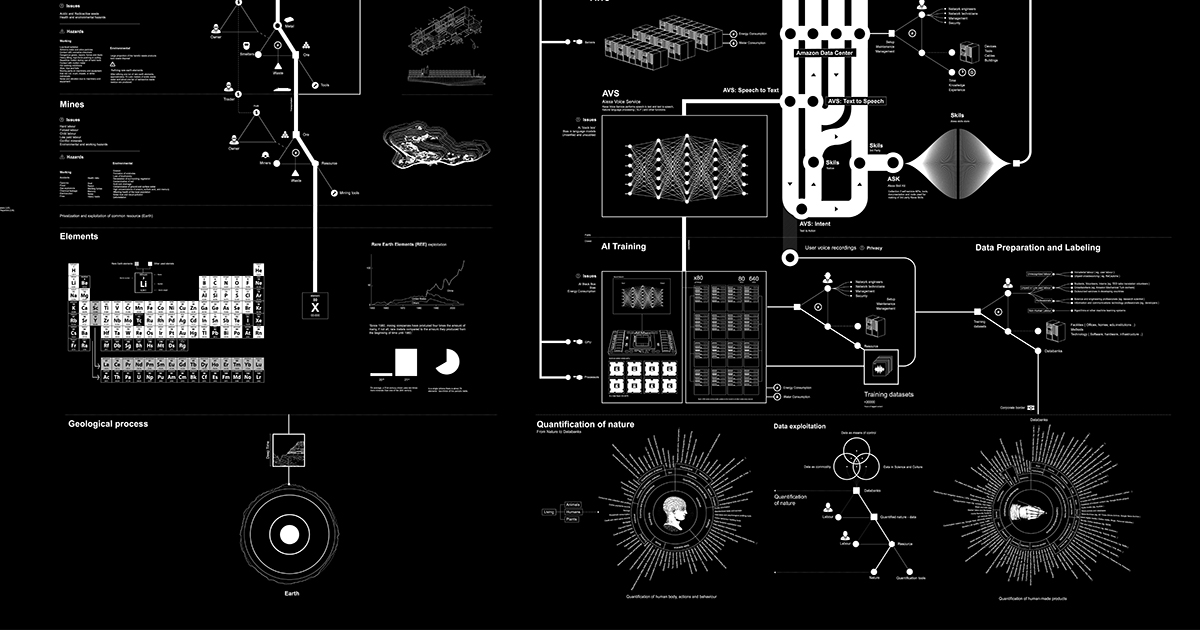

The New York Times has an opinion piece by Sahil Chinoy on how they built a facial recognition machine: We Built a (Legal) Facial Recognition Machine for $60. It describes an inexpensive experiment in which they took footage of people walking past cameras installed in Bryant Park and compared the faces to those of known people who work in the area (scraped from the web sites of organizations that have offices in the neighborhood). Everything they did used public resources that others could use. The cameras stream their footage online, and anyone can scrape the images. The image database they gathered came from public web sites. The software is a service (Amazon’s Rekognition?). The article asks us to imagine the resources available to law enforcement.

I’m intrigued by this experiment by the New York Times. It is a form of design thinking: they designed something to help us understand the implications of a technology rather than just writing about what others say. Or we could say it is a form of journalistic experimentation.

Why does facial recognition spook us? Is recognizing people something we feel is deeply human? Or is it the potential for recognition in all sorts of situations? Do we need to start guarding our faces?

Facial recognition is categorically different from other forms of surveillance, Mr. Hartzog said, and uniquely dangerous. Faces are hard to hide and can be observed from far away, unlike a fingerprint. Name and face databases of law-abiding citizens, like driver’s license records, already exist. And for the most part, facial recognition surveillance can be set up using cameras already on the streets.

This is one of a number of excellent articles by the New York Times that are part of their Privacy Project.