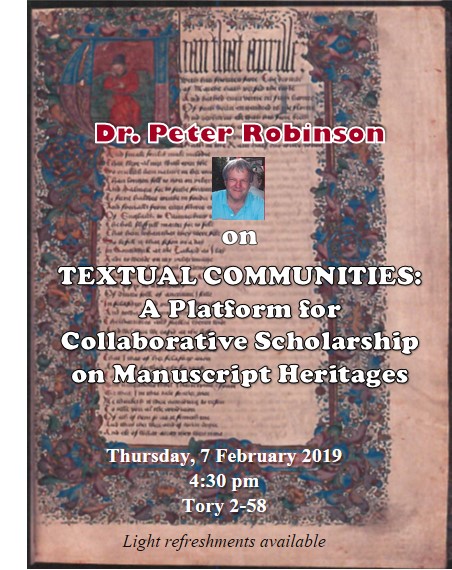

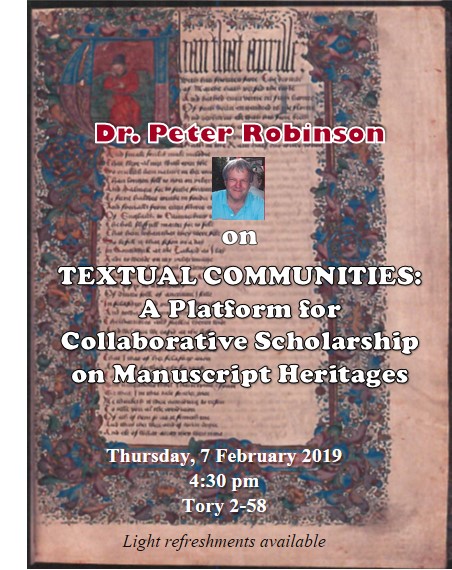

Peter Robinson gave a talk on “Textual Communities: A Platform for Collaborative Scholarship on Manuscript Heritages” as part of the Faculty of Arts' Singhmar Guest Speaker Program.

He started by talking about whether textual traditions had any relationship to the material world. How do texts relate to each other?

Today, stemmata as visualizations are models that go beyond the manuscripts themselves to propose evolutionary hypotheses in visual form.

He then showed what he is doing with the Canterbury Tales Project and talked about the challenges of adapting its time-consuming transcription process to other manuscripts. There are lots of different transcription systems, but few that handle collation. There are also the problems of cost and of involving a distributed network of people.

He then defined text:

A text is an act of (human) communication that is inscribed in a document.

I wondered how he would deal with Allen Renear’s argument that there are Real Abstract Objects which, like Platonic Forms, are real but have no material instance. When we talk, for example, of “Hamlet” we aren’t talking about a particular instance, but about an abstract object. Likewise with things like “justice,” “history,” and “love.” Peter responded that the work doesn’t exist except as its instances.

He also mentioned that this is why stand-off markup doesn’t work: texts aren’t a set of linear objects. It is better to represent a text as a tree of leaves.
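To make the "tree of leaves" idea concrete for myself, here is a minimal sketch in Python. It is my own illustration, not Robinson's data model: the `Node` and `Leaf` classes, the `page`/`line` labels, and the sample words are all assumptions. The point is only that the words sit at the leaves and the reading text falls out of walking the tree in order.

```python
from dataclasses import dataclass, field
from typing import Iterator, List, Union


@dataclass
class Leaf:
    """A terminal unit of text, e.g. a single word (hypothetical granularity)."""
    text: str


@dataclass
class Node:
    """A structural container (page, line, ...) holding nodes or leaves."""
    label: str
    children: List[Union["Node", Leaf]] = field(default_factory=list)


def leaves(item: Union[Node, Leaf]) -> Iterator[str]:
    """Yield leaf texts depth-first, left to right (document order)."""
    if isinstance(item, Leaf):
        yield item.text
    else:
        for child in item.children:
            yield from leaves(child)


# Hypothetical example: two lines on one manuscript page.
page = Node("page", [
    Node("line", [Leaf("Whan"), Leaf("that"), Leaf("Aprill")]),
    Node("line", [Leaf("with"), Leaf("his"), Leaf("shoures"), Leaf("soote")]),
])

reading_text = " ".join(leaves(page))
```

A second hierarchy (say, the poem's structure rather than the page's) could in principle point at the same leaves, but how Textual Communities actually handles that was not something I captured in these notes.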

So, he launched Textual Communities – https://textualcommunities.org/

This is a distributed editing system that also has collation.
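For readers who haven't met collation before, it means comparing transcriptions of different witnesses to find where they vary. The sketch below is a toy word-level version using Python's standard `difflib`; it is not how Textual Communities does it, and the `collate` function and the two sample readings are invented for illustration.

```python
from difflib import SequenceMatcher
from typing import List, Tuple


def collate(base: str, witness: str) -> List[Tuple[str, str, str]]:
    """Return (operation, base reading, witness reading) for each variant.

    Both transcriptions are split on whitespace and aligned word by word;
    stretches where the two agree are skipped.
    """
    a, b = base.split(), witness.split()
    matcher = SequenceMatcher(None, a, b, autojunk=False)
    variants = []
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag != "equal":
            variants.append((tag, " ".join(a[i1:i2]), " ".join(b[j1:j2])))
    return variants


# Hypothetical readings of the same line in two witnesses.
variants = collate(
    "Whan that Aprill with his shoures soote",
    "Whan that Averyll with his shoures sote",
)
for tag, base_reading, witness_reading in variants:
    print(f"{tag}: {base_reading!r} -> {witness_reading!r}")
```

Real collation tools also have to deal with spelling normalization, transpositions, and more than two witnesses, which is part of why so few transcription systems handle it.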