After Axon announced plans for a Taser-equipped drone that it said could prevent mass shootings, nine members of the company’s ethics board stepped down.

Ethics boards can make a difference, as a story from The New York Times, “Axon Pauses Plans for Taser Drone as Ethics Board Members Resign,” shows. The problem is that the board members had to resign for it to happen.

The background is this: after the recent school shootings, Axon announced an early-stage concept for a TASER drone, combining two emerging technologies, drones and non-lethal energy weapons. The proposal called for public debate and legislation: “We cannot introduce anything like non-lethal drones into schools without rigorous debate and laws that govern their use.” It went on to discuss CEO Rick Smith’s “3 Laws of Non-Lethal Robotics: A New Approach to Reduce Shootings.” In a 2021 video about his 3 laws, Smith spells out a scenario in which a remote operator (police?) guides a drone prepositioned in a school to incapacitate a threat. The 3 laws are:

- Non-lethal drones should be used to save lives, not take them.

- Humans must own use-of-force decisions and take moral and legal responsibility.

- Agencies must provide rigorous oversight and transparency to ensure acceptable use.

The ethics board had reviewed a limited internal proposal and rejected it. Nine of its members then resigned after Axon went ahead anyway and announced the concept on Twitter on June 2nd, 2022.

Rick Smith, CEO of Axon, soon issued a statement pausing work on the idea. He described the early announcement as intended to start a conversation:

> Our announcement was intended to initiate a conversation on this as a potential solution, and it did lead to considerable public discussion that has provided us with a deeper appreciation of the complex and important considerations relating to this matter. I acknowledge that our passion for finding new solutions to stop mass shootings led us to move quickly to share our ideas.

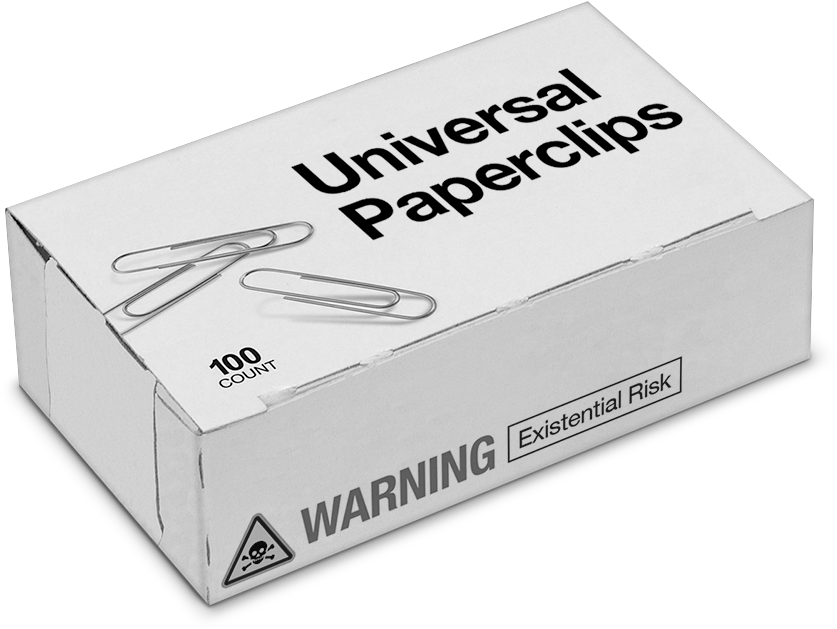

These resignations illustrate several points. First, we see Axon struggling with ethics in the face of opportunity. Second, we see an ethics board working, even if working meant resigning. Such deliberations are usually hidden from public view. Third, we see disagreement over autonomous weapons. Axon wants social license for a close alternative to AI-driven drones; it is trying to find an acceptable window for its business. Finally, it is interesting how Smith echoes Asimov’s Three Laws of Robotics as he tries to reassure us that good system design would mitigate the dangers of experimenting with weaponized drones in our schools.