“every move you make…, every word you say, every game you play…, I’ll be watching you.” (The Police – Every Breath You Take)

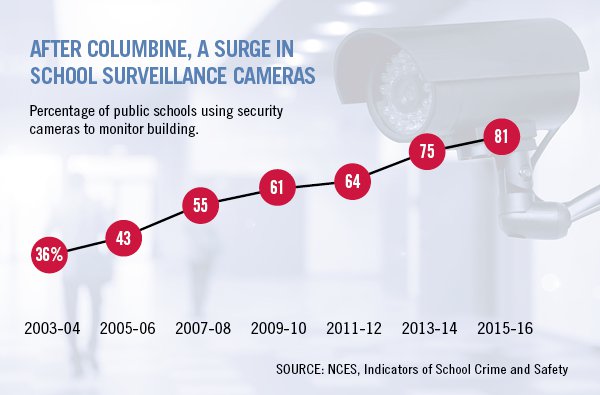

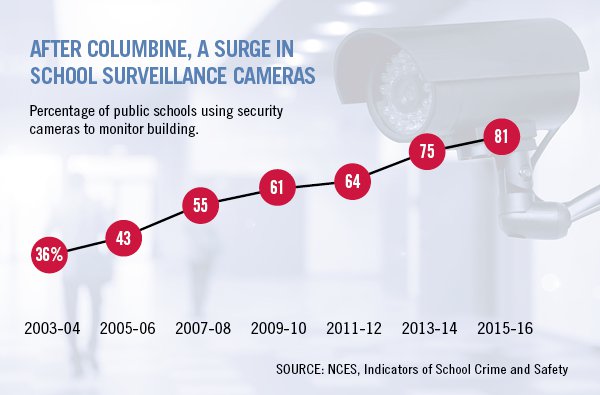

Education Week has an alarming story about how schools are using surveillance: “Schools Are Deploying Massive Digital Surveillance Systems. The Results Are Alarming.” The story, by Benjamin Herold, dates from May 30, 2019. It covers not only the deployment of cameras but also the use of companies like Social Sentinel, Securly, and Gaggle that monitor students’ social media or school computers.

> Every day, Gaggle monitors the digital content created by nearly 5 million U.S. K-12 students. That includes all their files, messages, and class assignments created and stored using school-issued devices and accounts.
>
> The company’s machine-learning algorithms automatically scan all that information, looking for keywords and other clues that might indicate something bad is about to happen. Human employees at Gaggle review the most serious alerts before deciding whether to notify school district officials responsible for some combination of safety, technology, and student services. Typically, those administrators then decide on a case-by-case basis whether to inform principals or other building-level staff members.

The story provides details that run from the serious to the absurd. It mentions the ACLU’s concern that such surveillance can desensitize children to being watched and make it seem normal. The ACLU story also draws a connection to laws that forbid federal agencies from studying or sharing data that could make the case for gun control. As a result, the obvious ways to stop gun violence in schools go unstudied, and surveillance companies step in with their own solutions.

Needless to say, surveillance has its own potential harms beyond desensitization. The ACLU story lists the following:

- Suppression of students’ intellectual freedom, because students will not want to investigate unpopular or verboten subjects if the focus of their research might be revealed.

- Suppression of students’ freedom of speech, because students will not feel at ease engaging in private conversations they do not want revealed to the world at large.

- Suppression of students’ freedom of association, because surveillance can reveal a student’s social contacts and the groups a student engages with, including groups a student might wish to keep private, like LGBTQ organizations or those promoting locally unpopular political views or candidates.

- Undermining students’ expectation of privacy, which occurs when they know their movements, communications, and associations are being watched and scrutinized.

- False identification of students as safety threats, which exposes them to a range of physical, emotional, and psychological harms.

As with the massive investment in surveillance for national security and counterterrorism, we need to ask whether these systems are worth their cost, both financial and otherwise. Unfortunately, protecting children, like protecting against terrorism, is hard to put a price on, which makes it hard to argue against such investments.