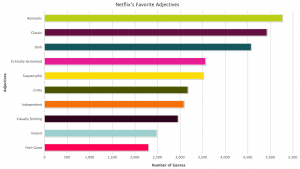

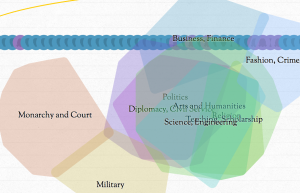

Alexis C. Madrigal has a fine article in The Atlantic, “How Netflix Reverse Engineered Hollywood” (Jan. 2, 2014). The article moves from an interesting problem about Netflix’s micro-genres, to a text analysis of the results of a scrape, to reverse engineering the Netflix algorithm, to a genre generator (at the top of the article), and finally to an interview with the Netflix VP of Product who was responsible for the tagging system. It is a lovely example of thinking a problem through and reaching for technology only when needed. The text analysis isn’t the point; it is a tool for understanding the 76,897 micro-genres uncovered. (Think about it … Netflix has over 70,000 genres of movies and TV shows, some with no actual movies or shows as examples of the micro-genre.)

Madrigal goes on to describe the procedure Netflix uses to create genres and to use them in recommending shows. It turns out to be a combination of content analysis (actual humans watching a movie or show and rating it in various ways) and automatic methods that combine tags. This same combination of human and machine methods also describes Madrigal’s own pursuit of Netflix genres. It is another sense of humanities computing: procedures that involve both human and algorithmic interventions.

The article ends with an anomaly that illustrates the hybridity of the procedure. It turns out the actor named most often is Raymond Burr of Perry Mason: Netflix has more altgenres featuring Raymond Burr than any other actor. Why would he rank so high in the micro-genres? Madrigal floats a theory that Yellin, the VP, refutes, but Yellin can’t explain the anomaly either. As Madrigal points out, in Perry Mason the mystery is always resolved by the end. In the mystery of Raymond Burr’s place in the genres, however, there is no revealing piece of evidence that helps us understand how he rose in the ranks.

> On the other hand, no one — not even Yellin — is quite sure why there are so many altgenres that feature Raymond Burr and Barbara Hale. It’s inexplicable with human logic. It’s just something that happened.
>
> I tried on a bunch of different names for the Perry Mason thing: ghost, gremlin, not-quite-a-bug. What do you call the something-in-the-code-and-data which led to the existence of these microgenres?
>
> The vexing, remarkable conclusion is that when companies combine human intelligence and machine intelligence, some things happen that we cannot understand.
>
> “Let me get philosophical for a minute. In a human world, life is made interesting by serendipity,” Yellin told me. “The more complexity you add to a machine world, you’re adding serendipity that you couldn’t imagine. Perry Mason is going to happen. These ghosts in the machine are always going to be a by-product of the complexity. And sometimes we call it a bug and sometimes we call it a feature.”

Perhaps this serendipity is what is original in hybrid procedures that combine human practices and algorithms? For some, these anomalies are the false positives that disrupt big data’s certainty. For others, they are a different kind of insight, one that emerges from mixing human and computer processes. As Madrigal concludes:

> Perry Mason episodes were famous for the reveal, the pivotal moment in a trial when Mason would reveal the crucial piece of evidence that makes it all make sense and wins the day.
>
> Now, reality gets coded into data for the machines, and then decoded back into descriptions for humans. Along the way, humans’ ability to understand what’s happening gets thinned out. When we go looking for answers and causes, we rarely find that aha! evidence or have the Perry Mason moment. Because it all doesn’t actually make sense.
>
> Netflix may have solved the mystery of what to watch next, but that generated its own smaller mysteries.

And sometimes we call that a bug and sometimes we call it a feature.