D-Lib Magazine has a report on next steps for high performance computing (or as they call it in the UK, “e-science”) and the humanities, Next Steps for E-Science, the Textual Humanities and VREs. The report summarizes four presentations on what is next. Some quotes and reactions:

The crucial point they made was that digital libraries are far more than simple digital surrogates of existing conventional libraries. They are, or at least have the potential to be, complex Virtual Research Environments (VREs), but researchers and e-infrastructure providers in the humanities lack the resources to realize this full potential.

I would call this the cyberinfrastructure step, but I’m not sure it will be libraries that lead. Nor am I sure about the “virtual” in research environments. Space matters and real space is so much more high-bandwidth than the virtual. In fact, subsequent papers made something like this point about the shape of the environment to come.

Loretta Auvil from the NCSA is summarized to the effect that the Software Environment for the Advancement of Scholarly Research (SEASR) is,

API-driven approach enables analyses run by text mining tools, such as NoraVis (http://www.noraproject.org/description.php) and Featurelens (http://www.cs.umd.edu/hcil/textvis/featurelens/) to be published to web services. This is critical: a VRE that is based on digital library infrastructure will have to include not just text, but software tools that allow users to analyse, retrieve (elements of) and search those texts in ever more sophisticated ways. This requires formal, documented and sharable workflows, and mirrors needs identified in the hard science communities, which are being met by initiatives such as the myExperiment project (http://www.myexperiment.org). A key priority of this project is to implement formal, yet sharable, workflows across different research domains.

While I agree, of course, on the need for tools, I’m not sure it follows that this “requires” us to be able to share workflows. Our data from TAPoR is that it is the simple environment, TAPoRware, that is being used most, not the portal, though simple tools may be a way in to VREs. I’m guessing that the idea of workflows is more of a hypothesis of what will enable the rapid development of domain-specific research utilities (where a utility does a task of the domain, while a tool does something more primitive). Workflows could turn out to be perceived as domain-specific composite tools rather than flows, just as most “primitive” tools have some flow within them. What may happen is that libraries and centres hire programmers to develop workflows for particular teams, in consultation with researchers, for specific resources, and this is the promise of SEASR. When it crosses the Rubicon of reality it will give support units a powerful way to rapidly deploy sophisticated research environments. But if it is programmers who do this, will they want a flow-model application development environment, or will they default back to something familiar like Java? (What is the research on the success of visual programming environments?)
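To make the distinction between a primitive tool and a composite utility concrete, here is a minimal sketch in Python. It is not SEASR, TAPoRware or myExperiment code; every function name below (`tokenize`, `remove_stopwords`, `count_frequencies`, `compose`) and the tiny stopword list are hypothetical and chosen only for illustration. The point is that a "workflow" can be packaged so that the researcher sees one tool, while the flow stays inside it.

```python
import re
from collections import Counter
from functools import reduce

# Hypothetical primitive tools: each does one small, domain-neutral job.

def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())

def remove_stopwords(tokens, stopwords=frozenset({"the", "a", "of", "and", "to", "in"})):
    """Drop very common words (an illustrative list, not a real stoplist)."""
    return [t for t in tokens if t not in stopwords]

def count_frequencies(tokens):
    """Count how often each token occurs."""
    return Counter(tokens)

def compose(*steps):
    """Chain primitive tools into a single callable, applied left to right."""
    def workflow(data):
        return reduce(lambda acc, step: step(acc), steps, data)
    return workflow

# A composite "utility": to its user it is one tool, though it is a flow inside.
word_profile = compose(tokenize, remove_stopwords, count_frequencies)

if __name__ == "__main__":
    sample = "The whale and the sea: the whale of the sea."
    print(word_profile(sample))
```

Whether researchers would want to assemble `word_profile` themselves, or would rather have a programmer hand it to them as a finished utility, is exactly the open question.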

Boncheva is reported as presenting the Generic Architecture for Text Engineering (GATE).

A key theme of the workshop was the well documented need researchers have to be able to annotate the texts upon which they are working: this is crucial to the research process. The Semantic Annotation Factory Environment (SAFE) by GATE will help annotators, language engineers and curators to deal with the (often tedious) work of SA, as it adds information extraction tools and other means to the annotation environment that make at least parts of the annotation process work automatically. This is known as a ‘factory’, as it will not completely substitute the manual annotation process, but rather complement it with the work of robots that help with the information extraction.

The alternative to the tool model of what humanists need is the annotation environment. John Bradley has been pursuing a version of this with Pliny. It is premised on the view that humanists want to closely mark up, annotate, and manipulate smaller collections of texts as they read. Tools have a place, but within a reading environment. GATE is doing something a little different: they are trying to semi-automate linguistic annotation, but their tools could be used in a more exploratory environment.
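As a rough illustration of what "semi-automated" annotation can mean, here is a small Python sketch. It does not use GATE or SAFE and does not reflect their APIs; the `Annotation` class, the gazetteer lookup and the merge rule are all assumptions made for the example. An automatic pass proposes annotations from a word list, and a human annotator's decisions override the machine wherever the two overlap.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Annotation:
    start: int   # character offset where the span begins
    end: int     # character offset just past the span
    label: str
    source: str  # "auto" or "manual"

def auto_annotate(text, gazetteer):
    """Propose an annotation for every occurrence of a gazetteer term."""
    found = []
    for term, label in gazetteer.items():
        pos = text.find(term)
        while pos != -1:
            found.append(Annotation(pos, pos + len(term), label, "auto"))
            pos = text.find(term, pos + 1)
    return sorted(found, key=lambda a: a.start)

def overlaps(a, b):
    """True if the two character spans share at least one character."""
    return a.start < b.end and b.start < a.end

def merge(auto, manual):
    """Keep every manual annotation; keep automatic ones only where
    no manual annotation overlaps them."""
    kept = [a for a in auto if not any(overlaps(a, m) for m in manual)]
    return sorted(kept + list(manual), key=lambda a: a.start)

if __name__ == "__main__":
    text = "Ishmael sailed from Nantucket."
    proposals = auto_annotate(text, {"Ishmael": "Person", "Nantucket": "Place"})
    # The reader disagrees with the machine about the first span.
    corrections = [Annotation(0, 7, "Narrator", "manual")]
    for ann in merge(proposals, corrections):
        print(ann, text[ann.start:ann.end])
```

The design choice worth noticing is that the machine only ever proposes: the manual layer always wins, which matches the idea of complementing rather than replacing the annotator.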

What I like about this report is that we see three complementary and achievable visions of the next steps in digital humanities:

- The development of cyberinfrastructure building on the library, but also digital humanities centres.

- The development of application development frameworks that can create domain-specific interfaces for research that takes advantage of large-scale resources.

- The development of reading and annotation tools that work with and enhance electronic texts.

I think there is a fourth agenda item we need to consider, which is how we will enable reflection on and preservation of the work of the last 40 years. Willard McCarty has asked how we will write the history of humanities computing and I don’t think he means a list of people and dates. I think he means how we will develop from a start-up and unreflective culture to one that tries to understand itself in change. That means we need to start documenting and preserving what Julia Flanders has called the craft projects of the first generations, which prepared the way for these large-scale visions.

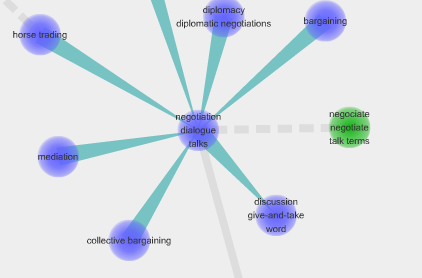

Visuwords online graphical dictionary and thesaurus

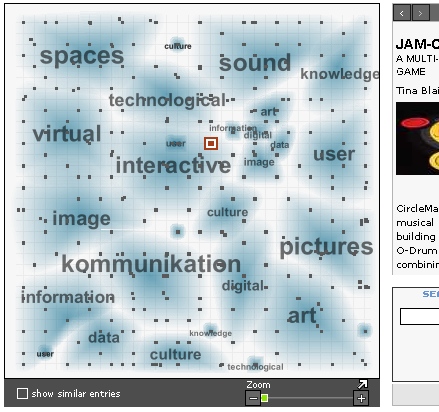

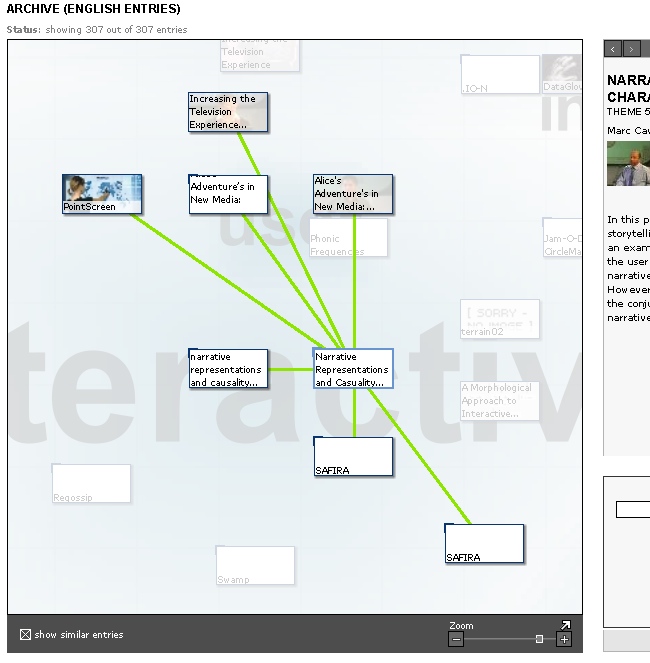

With colleagues Stéfan Sinclair, Alexandre Sevigny and Susan Brown, I recently got a SSHRC Research and Development Initiative grant for a project Mashing Texts. This project will look at “mashing” open tools to test ideas for text research environments. Here is