“We’re in this era of measurement but we don’t know what we should be measuring,” said Ryan Fuller, former vice president for workplace intelligence at Microsoft.

The New York Times has essay on Workplace Productivity: Are You Being Tracked? The neat thing is that the article tracks your reading of it to give you a taste of the sorts of tracking now being deployed for remote (and on site) workers. If you pause and don’t scroll it puts up messages like “Hey are you still there? You’ve been inactive for 32 seconds.”

But Ms. Kraemer, like many of her colleagues, found that WorkSmart upended ideas she had taken for granted: that she would have more freedom in her home than at an office; that her M.B.A. and experience had earned her more say over her time.

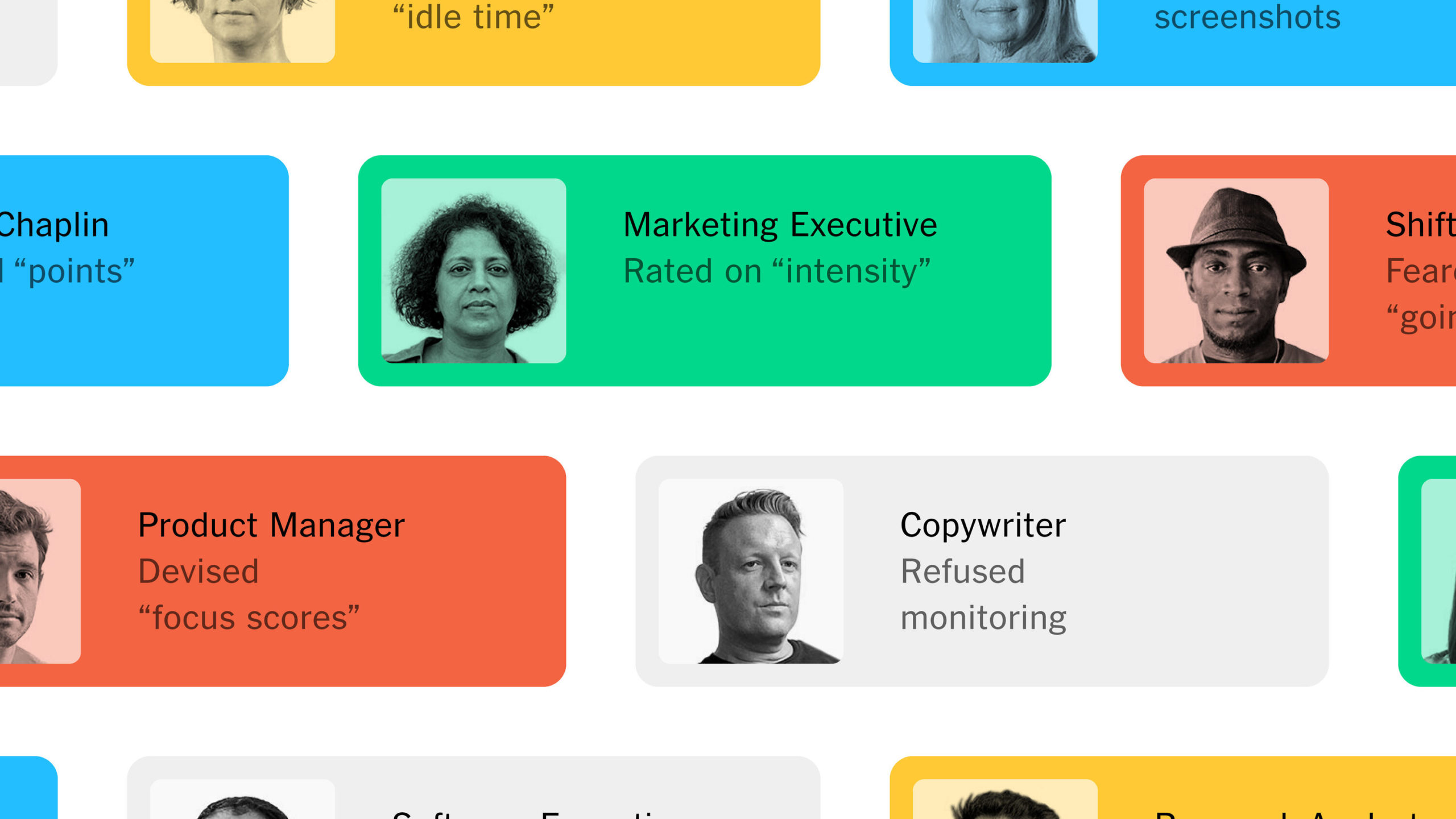

What is new is the shift to remote work due to Covid. Many companies are fine with remote work if they can guarantee productivity. The other thing that is changing is the use of tracking for not just manual work, but also for white-collar work.

I’ve noticed that this goes hand in hand with self-tracking. My Apple Watch/iPhone offer a weekly summary of my browsing. It also offers to track my physical activity. If I go for a walk, somewhere close to a kilometer it asks if I want this tracked as exercise.

The questions raised by the authors of the New York Time article include Whether we are tracking the right things? What are we losing with all this tracking? What is happening to all this data? Can companies sell the data about employees?

The article is by Jodi Kantor and Arya Sundaram. It is produced by Aliza Aufrichtig and Rumsey Taylor. Aug. 14, 2022