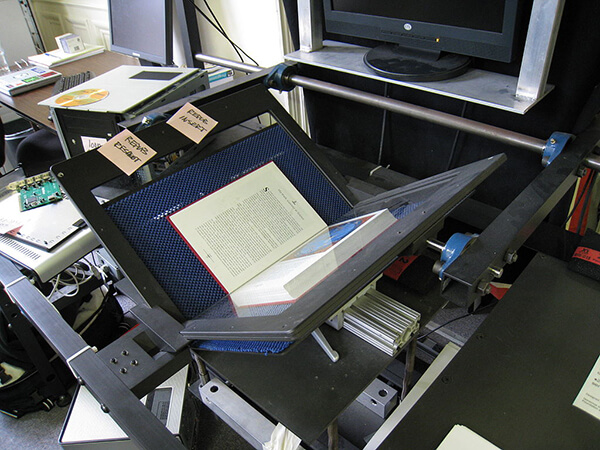

> In response to unprecedented exigencies, more systemic solutions may be necessary and fully justifiable under fair use and fair dealing. This includes variants of controlled digital lending (CDL), in which books are scanned and lent in digital form, preserving the same one-to-one scarcity and time limits that would apply to lending their physical copies. Even before the new coronavirus, a growing number of libraries have implemented CDL for select physical collections.

The Association of Research Libraries has a blog entry, "Digitization in an Emergency: Fair Use/Fair Dealing and How Libraries Are Adapting to the Pandemic" by Ryan Clough (April 1, 2020), with good links. The closing of physical libraries has accelerated the move from a hybrid of physical and digital resources to an entirely digital library. Controlled digital lending (where only a limited number of patrons can read a digital asset at a time) seems a sensible way to go.

To be honest, I am so tired of sitting on my butt that I plan to spend much more time walking to and browsing around the library at the University of Alberta. As much as digital access is a convenience, I’m missing the occasions for getting outside and walking that a library affords. Perhaps we should think of the library as a labyrinth – something deliberately difficult to navigate in order to give you an excuse to walk around.

Perhaps I need a book scanner on a standing desk at home to keep me on my feet.