Reacting to the Past is the name of a set of games designed to get students thinking about historical moments. Students play out games set in the past and use texts to inform their play. The instructor then just facilitates the class and grades their work. It reminds me of role-playing events like the Model United Nations, but with history and ideas being modeled. Now I have to find a workshop to attend to learn more, because the materials are behind a password.

Sample on Randomness

Mark Sample has posted his gem of an MLA paper, An Account of Randomness in Literary Computing. I wish I could write papers quite so clear and evocative. He brings interesting historical examples to bear on a question that crosses all sorts of disciplines – that of randomness. He shows how the importance of randomness connects to poetic experiments in computing.

I would recommend reading the article immediately, but I discovered, as with many good works, I ended up spending a lot of time following up the links and reading stuff on sites like the MIT 150 Exhibition which has a section on Analog/Digital MIT with online exhibits on subjects like the MIT Project Athena and the TX-0. Instead I will warn – beware of reading interesting things!

Lack of guidelines create ethical dilemmas in social network-based research

e! Science News has a story about an article in Science about how a Lack of guidelines create ethical dilemmas in social network-based research.

The full article by Shapiro and Ossorio, Regulation of Online Social Network Studies can be found in the 11 January, 2013 issue of Science (Vol. 339 no. 6116, pp. 144-45.)

The Internet has been a godsend for all sorts of research as it lets us scrape large amounts of data representing discourse about a subject without having to pay for interviews or other forms of data gathering. It has been a boon for those of us using text analysis or those in computational linguistics. At the same time, much of what we gather can be written by people that we would not be allowed to interview without careful ethics review. Vulnerable people and youth can leave a trail of information on the Internet that we wouldn’t normally be allowed to gather directly without careful protections.

I participated many years ago in a symposium on this issue. The case we were considering involved scraping breast cancer survivor blogs. In addition to the issue of the vulnerability of the authors, we discussed whether they understood that their blogs were public, or if they considered posting on a blog to be like talking to a friend in a public space. At the time it seemed that many bloggers didn’t realize how they could be searched, found, and scraped. A final issue discussed was the veracity of the blogs. How would a researcher know they were actually reading a blog by a cancer survivor? How would they know the posts were authentic without being able to question the writer? Like all symposia we left with more questions than answers.

In the end an ethics board authorized the study. (I was on neither the study nor the board – just part of a symposium to discuss the issue.)

Digital Humanities Pedagogy: Practices, Principles and Politics

Open Book Publishers has just published Digital Humanities Pedagogy: Practices, Principles and Politics online. Stéfan Sinclair and I have two chapters in the collection, one on “Acculturation and the Digital Humanities Community” and one on “Teaching Computer-Assisted Text Analysis.”

The Acculturation chapter sets out the ways in which we try to train students by involving them in project teams rather than only through courses. This approach I learned watching Jerome McGann and Johanna Drucker at the University of Virginia. My goal has always been to create the sort of project culture they did (and that the Scholar’s Lab now continues.)

The editor Brett D. Hirsch deserves a lot of credit for gently seeing this through.

MLA 2013 Conference Notes

I’ve just posted my MLA 2013 convention notes on philosophi.ca (my wiki). I participated in a workshop on getting started with DH organized by DHCommons, gave a paper on “thinking through theoretical things”, and participated in a panel on “Open Sesame” (interoperability for literary study.)

The sessions seemed full, even the theory one which started at 7pm! (MLA folk are serious about theorizing.)

At the convention the MLA announced and promoted a new digital MLA Commons. I’ve been poking around and trying to figure out what it will become. They say it is “a developing network linking members of the Modern Language Association.” I’m not sure I need one more venue to link to people, but it could prove an important forum if promoted.

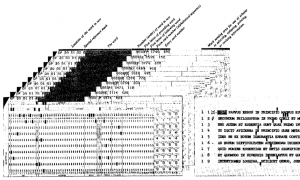

Tasman: Literary Data Processing

I came across a 1957 article by an IBM scientist, P. Tasman on the methods used in Roberto Busa’s Index Thomisticus project, with the title Literary Data Processing (IBM Journal of Research and Development, 1(3): 249-256.) The article, which is in the third issue of the IBM Journal of Research and Development, has an illustration of how they used punch cards for this project.

While the reproduction is poor, you can read the things encoded on the card for each word:

- Location in text

- Special reference mark

- Word

- Number of word in text

- First letter of preceding word

- First letter of following word

- Form card number

- Entry card number
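To make the card layout concrete, here is a minimal Python sketch of one record per word carrying the eight fields listed above. The field names, the `make_cards` helper, the location format and the sample text are all assumptions for illustration, not Tasman's notation. The sketch also does not try to assign form or entry card numbers, since the list alone does not say how those were numbered.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class WordCard:
    """One card per word, mirroring the fields listed above (names are hypothetical)."""
    location: str                 # location in text (format here is an assumption)
    reference_mark: str           # special reference mark (left blank in this sketch)
    word: str
    word_number: int              # number of word in text, counted from 1
    prev_initial: str             # first letter of preceding word ("" at the start)
    next_initial: str             # first letter of following word ("" at the end)
    form_card_number: Optional[int] = None   # not assigned in this sketch
    entry_card_number: Optional[int] = None  # not assigned in this sketch


def make_cards(text: str, location: str) -> List[WordCard]:
    """Split a passage on whitespace and produce one card per word."""
    words = text.split()
    cards = []
    for i, word in enumerate(words):
        prev_initial = words[i - 1][0] if i > 0 else ""
        next_initial = words[i + 1][0] if i + 1 < len(words) else ""
        cards.append(WordCard(location, "", word, i + 1, prev_initial, next_initial))
    return cards


cards = make_cards("in principio erat verbum", location="line 1")
for card in cards:
    print(card.word_number, card.word, repr(card.prev_initial), repr(card.next_initial))
```

Storing the neighbouring initials on each card means a word keeps a little of its context even after the cards are sorted alphabetically, which is presumably why they were punched.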

At the end Tasman speculates on how these methods developed on the project could be used in other areas:

Apart from literary analysis, it appears that other areas of documentation such as legal, chemical, medical, scientific, and engineering information are now susceptible to the methods evolved. It is evident, of course, that the transcription of the documents in these other fields necessitates special sets of ground rules and codes in order to provide for information retrieval, and the results will depend entirely upon the degree and refinement of coding and the variety of cross referencing desired.

The indexing and coding techniques developed by this method offer a comparatively fast method of literature searching, and it appears that the machine-searching application may initiate a new era of language engineering. It should certainly lead to improved and more sophisticated techniques for use in libraries, chemical documentation, and abstract preparation, as well as in literary analysis.

Busa’s project may have been more than just the first humanities computing project. It seems to be one of the first projects to use computers in handling textual information and a project that showed the possibilities for searching any sort of literature. I should note that in the issue after the one in which Tasman’s article appears there is an article by H. P. Luhn (developer of the KWIC) on A Statistical Approach to Mechanized Encoding and Searching of Literary Information. (IBM Journal of Research and Development 1(4): 309-317.) Luhn specifically mentions the Tasman article and the concording methods developed as being useful to the larger statistical text mining that he proposes. For IBM researchers Busa’s project was an important first experiment handling unstructured text.

I learned about the Tasman article in a journal paper deposited by Thomas Nelson Winter on Roberto Busa, S.J., and the Invention of the Machine-Generated Concordance. The paper gives an excellent account of Busa’s project and its significance to concording. Well worth the read!

Digital Humanities Talks at the 2013 MLA Convention

The ACH has put together a useful Guide to Digital-Humanities Talks at the 2013 MLA Convention. I will be presenting at various events including:

- Get Started in Digital Humanities with Help from DHCommons – an unconference workshop organized by DHCommons. I’ll be showing Voyant and TAPoR 2.0. My script is here: http://hermeneuti.ca/node/250

- I’ll be presenting “Theoretical Things for the Humanities” as part of a session organized by Stefan Franchi on Digital Humanities and Theory.

- I’ll be part of a panel on Open Sesame: Interoperability in Digital Literary Studies. This panel organized by Susan Brown has a stellar list of participants.

GAME THEORY in the NYTimes

Just in time for Christmas, the New York Times has started an interesting ArtsBeat Blog called GAME THEORY. It is interesting that this multi-authored blog is in the “Arts Beat” area as opposed to under the Technology tab where most of the game stories are. Game Theory seems to want to take a broader view of games and culture, as the second post on Caring About Make-Believe Body Counts illustrates. This post starts by addressing the other blog columnists (as if this were a dialogue) and then turns to Wayne LaPierre’s speech about how to deal with the Connecticut school killings, which blames, among other things, violent games. The column then looks at the discourse around violence in games, including voices within the gaming industry that were critical of ultraviolence.

Those familiar with games who debate the medium’s violence now commonly assume that games may have become too violent. But they don’t assume that games should be free of violence. That is because of fake violence’s relationship with interactivity, which is a defining element of video games.

Stephen Totilo ends the column with his list of the best games of 2012 which includes Super Hexagon, Letterpress, Journey, Dys4ia, and Professor Layton and the Miracle Mask.

As I mentioned above, the blog column has a dialogical side with authors addressing each other. It also brings culture and game culture together, which reminds me of McLuhan, who argued that games reflect society, providing a form of catharsis. This column promises to theorize culture through the lens of games rather than just theorize games.

Short Guide To Evaluation Of Digital Work

The Journal of Digital Humanities has republished my Short Guide to Evaluation of Digital Work as part of an issue on Closing the Evaluation Gap (Vol. 1, No. 4). I first wrote the piece for my wiki and you can find the old version here. It is far more useful bundled with the other articles in this issue of JDH.

The JDH is a welcome experiment in peer-reviewed republication. One thing they do is to select content that has been published in other forms (blogs, online essays and so on) and then edit it for recombination in a thematic issue. The JDH builds on the neat Digital Humanities Now, which showcases interesting work from around the web. Both are projects of the Roy Rosenzweig Center for History and New Media. The CHNM deserves credit for thinking through what we can do with the openness of the web.

Clay Shirky: Napster, Udacity, and the Academy

Clay Shirky has a good essay on Napster, Udacity, and the Academy on his blog which considers who will be affected by MOOCs. He makes a number of interesting points:

- A number of the changes that the Internet has facilitated involved unbundling services that were bundled in other media. He gives the example of individual songs being unbundled from albums, but he could also have mentioned how classifieds have been unbundled from newspapers. Likewise MOOCs (Massive Open Online Courses), like the Introduction to Artificial Intelligence run by Peter Norvig and Sebastian Thrun at Stanford, unbundle the course from the university and certification.

- University lectures are inefficient, a poor way of teaching, and often not of the highest quality. Chances are there are better video lectures online for any established subject than what is offered locally. If universities fall into the trap of saving money by expanding class sizes until higher education is just a series of lectures and exams then we can hardly pretend to higher quality than MOOCs. Why would students in Alberta want to listen to me lecture when they could have someone from Harvard?

- MOOCs are far more likely to threaten the B colleges than the elite liberal arts colleges. A MOOC is not a small seminar experience for a top student and doesn’t compete with the high end. MOOCs compete with lectures (why not have the best lecturer?) and other passive learning approaches. MOOCs will threaten the University of Phoenix and other online programs that are not doing such a good job at retention anyway.

- MOOCs are great marketing for the elite universities which is why they may thrive even if there is no financial model or evaluation.

- The openness is important to MOOCs. Shirky gives the example of a Statistics 101 course that was improved by open criticism. By contrast most live courses aren’t open to peer evaluation. Instead they are treated like confidential instructor-patient interactions.

While I agree with much of what Shirky says and I welcome MOOCs, I’m not convinced they will have the revolutionary effect some think they will. I remember seeing the empty television frames at Scarborough College from when they thought teleducation was going to be the next thing. When it comes to education we seem to forget over and over that there is a rich history of distance education experiments. Shirky writes, “In the academy, we lecture other people every day about learning from history. Now its our turn…” but I don’t see evidence that he has done any research into the history of education. Instead Shirky adopts a paradigm shift rhetoric comparing MOOCs to Napster in potential for disruption as if that were history. We could just as easily compare them to the explosion of radio experiments between the wars (that disappeared by 1940.) Just how would we learn from history? What history is relevant here? Shirky is unconvincing in his choice of Napster as the relevant lesson.

Another issue I have is epistemological – I just don’t think MOOCs are that different from a how-to book or learning video when it comes to the delivery of knowledge. Anyone who wants to learn something in the West has a wealth of choices and MOOCs, if well designed, are one more welcome choice, but revolutionary they are not. The difficult issues around education don’t have to do with quality resources, but with time (for people trying to learn while holding down a job), motivation (to keep at it), interaction (to learn from mistakes) and certification (to explain what you know to others).

Now it’s my turn to learn from history. I propose these possible lessons:

- Unbundling will have an effect on the university, especially as costs escalate faster than inflation. We cannot expect society to pay at this escalating rate especially with the cost of health care eating into budgets. Right now what seems to be being unbundled is the professoriate from teaching as more and more teaching is done by sessionals. Do we really want to leave experiments in unbundling exclusively to others or are we willing to take responsibility for experimenting ourselves?

- One form of unbundling that we should experiment with more is unbundling the course from the class. Universities are stuck in the course = 12/3 weeks of classes on campus. These are the easiest way for us to run courses as we have a massive investment in infrastructure, but they aren’t necessarily the most convenient for students or subject matter. For graduate programs especially we should be experimenting with hybrid delivery models.

- Universities may very well end up not being the primary way people get their post-secondary education. Universities may continue as elite institutions leaving it to professional organizations, colleges and distance education institutions to handle the majority of students.

- Someone is going to come up with a reputable certification process for students who want to learn using a mix of books, study groups, MOOCs, college courses and so on. Imagine if a professional organization like the Chartered Accountants of Canada started offering a robust certification process that was independent of any university degree. For a fee you could take a series of tests and practicums that, if passed, would get you provincial certification to practice.

- The audience for MOOCs is global, not local. MOOCs may be a gift we in wealthier countries can give to the educationally limited around the world. Openly assessed MOOCs could supplement local learning and become a standard against which people could compare their own courses. On the other hand we could end up with an Amazon of education where one global entity drives out all but the elite educational institutions (which use it to stay elite.) Will we find ourselves talking about educational security (a nation needing its own educational system) and learning local (not taking courses from people that live more than 100K away)?

- We should strive for a wiki model for OOCs where they are not the marketing tools of elite institutions but maintained by the community.

In sum, we should welcome any new idea for learning, including MOOCs. We should welcome OOCs as another way of learning that may suit many. We should try developing OOCs (the M part we can leave to Stanford) and assess them. We should be open to different configurations of learning and not assume that how we do things now has any special privilege.