Chris Vickery (@VickerySec), the Research Director for UpGuard, has uncovered code repositories from AggregateIQ, the Canadian company that was building tools for and with SCL and Cambridge Analytica. See “The Aggregate IQ Files, Part One: How a Political Engineering Firm Exposed Their Code Base” and “AggregateIQ Created Cambridge Analytica’s Election Software, and Here’s the Proof” from Gizmodo.

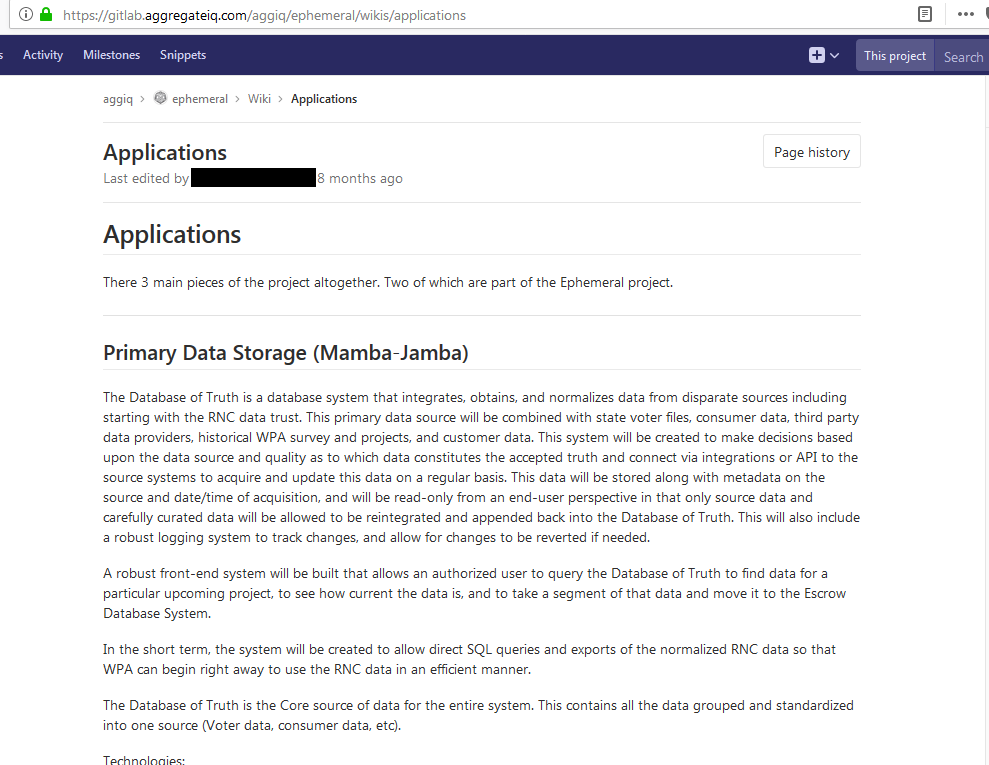

The screenshots from the repository show one project called Ephemeral, with the description “Because there is no such thing as THE TRUTH”. The “Primary Data Storage” of Ephemeral is called “Mamba Jamba”, presumably a joke on “mumbo jumbo”, which isn’t a good sign. What is more interesting is the description (see image above) of the data storage system as “The Database of Truth”. Here is a selection from that description:

The Database of Truth is a database system that integrates, obtains, and normalizes data from disparate sources including starting with the RNC data trust. … This system will be created to make decisions based upon the data source and quality as to which data constitutes the accepted truth and connect via integrations or API to the source systems to acquire and update this data on a regular basis.

A robust front-end system will be built that allows an authorized user to query the Database of Truth to find data for a particular upcoming project, to see how current the data is, and to take a segment of that data and move it to the Escrow Database System. …

The Database of Truth is the Core source of data for the entire system. …

One wonders if there is a philosophical theory, of sorts, in Ephemeral. A theory where no truth is built on the mumbo jumbo of a database of truth(s).

Ephemeral would seem to be part of Project Ripon, the system that Cambridge Analytica never really delivered to the Cruz campaign. Perhaps the system was so ephemeral that it never worked and therefore the Database of Truth never held THE TRUTH. Ripon might be better called Ripoff.