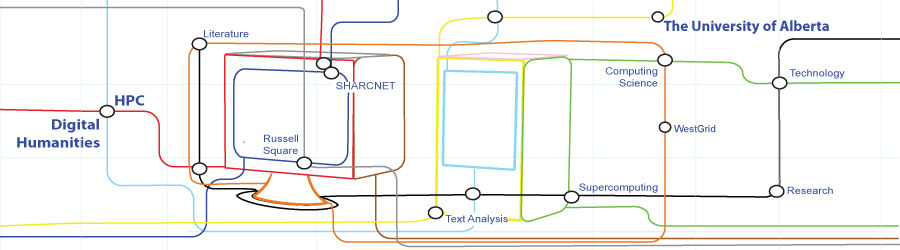

Next week we are running a workshop at the University of Alberta on the subject of bridging the digital humanities and high-performance computing (HPC). This workshop will bring together teams that will prototype ideas for HPC digital humanities projects. We will have Stephen Ramsay and Patrick Juola as guest speakers. It should be an excellent mix of formal and informal meetings. Above all, Megan Meredith-Lobary developed a neat graphic for the event. See Mind the Gap: Bridging HPC and the Humanities.

Category: High Performance Computing

Digging Into Data: New International Program

The NEH has announced a new program with SSHRC called Digging Into Data. The program is innovative in a number of ways. It addresses the challenges of large data collections and their analysis. It is also international in that it brings together the granting councils from three countries. Here is what the program says:

The idea behind the Digging into Data Challenge is to answer the question “what do you do with a million books?” Or a million pages of newspaper? Or a million photographs of artwork? That is, how does the notion of scale affect humanities and social science research? Now that scholars have access to huge repositories of digitized data — far more than they could read in a lifetime — what does that mean for research?

Applicants will form international teams from at least two of the participating countries. Winning teams will receive grants from two or more of the funding agencies and, one year later, will be invited to show off their work at a special conference. Our hope is that these projects will serve as exemplars to the field.

This feels like a turning point in the digital humanities. Until now we have had smaller grant programs like the ITST program in Canada. This program is on a larger scale, both in terms of funding available and in terms of the challenge.

Orion: Cyberinfrastructure for the Humanities

Yesterday I gave a talk, “Cyberinfrastructure in the Humanities: Back to Supercomputing,” on a cyberinfrastructure panel at the Orion conference Powering Research and Innovation: A National Summit. Alas, Michael Macy from Cornell, who was also supposed to talk, didn’t make it. (It is always more interesting to hear others than yourself.) I was asked to try to summarize the humanities needs and perspectives on cyberinfrastructure for research, which I did by pointing people to the ACLS Commission on Cyberinfrastructure report “Our Cultural Commonwealth.”

One of the points worth making over and over is that we now have a pretty good idea of what researchers in the humanities need as a base level of infrastructure (labs, servers and support). The interesting question is how our needs are evolving, and I think that is what the Bamboo project is trying to document. Another way to put it is that research computing support units need strategies for handling the evolution of cyberinfrastructure. They need ways of knowing what infrastructure should be treated like a utility (and therefore be free, always on and funded by the institution) and what infrastructure should be funded through competitions, requests or not at all. We would all love to have everything before we thought of it, but institutions can’t afford expensive stuff no one needs. My hope for Bamboo is that it will develop a baseline of what researchers can be shown to need (and use) and then develop strategies for consensually evolving that baseline in ways that help support units. High Performance Computing access is a case in point, as it is very expensive and what is available is usually structured for science research. How can we explore HPC in the humanities, and how would we know when it is time to provide general access?

High Performance Computing in the Arts & Humanities

Last April I helped organize a workshop on how High Performance Computing centres like SHARCNET can support the humanities. One of the things we agreed was needed was a good introduction to the intersection. John Bonnet agreed to write one and Kyle Kuchmey worked with me on examples to produce High Performance Computing in the Arts & Humanities. We hope this will be a gentle introduction to the intersection.

Supercomputing: World Community Grid

I got an announcement about A Workshop on Humanities Applications for the World Community Grid (WCG) being hosted by IBM. The WCG is a volunteer grid that uses the BOINC platform and is “powered” by IBM. These volunteer projects fascinate me – they are not our father’s computing, where the danger was computers getting smarter than us and taking over, and the paradigm was AI. Now the symbiosis of humans and computing is on a social scale – grids of processors and teams of people. Here is what the WCG says about their project:

World Community Grid’s mission is to create the largest public computing grid benefiting humanity. Our work is built on the belief that technological innovation combined with visionary scientific research and large-scale volunteerism can change our world for the better. Our success depends on individuals – like you – collectively contributing their unused computer time to this not-for-profit endeavor.

What sorts of humanities problems could we run on a grid like this? Do humanities projects “benefit humanity” or is medicine (curing cancer) the last human research left? My instinct tells me we could do internet mining for concepts where we gather, clean and analyze large numbers of documents on concepts like “dialogue”. Perhaps someone wants to submit a proposal with me.

Digital Humanities And High Performance Computing

This Monday and Tuesday we had a workshop on Digital Humanities And High Performance Computing here at McMaster. The workshop, sponsored by SHARCNET, was organized to identify challenges, opportunities and concrete steps that can be taken to support humanists interested in HPC techniques. Materials for the workshop are now on my wiki philosophi.ca, but will soon migrate to SHARCNET’s.

The intersection of the humanities and HPC is heating up. We aren’t the only people developing encounters. The NEH (National Endowment for the Humanities) has a Humanities High Performance Computing Resource Page that describes grant programs for humanists. One program with the DOE gives humanists support and cycles on machines at the NERSC. The support will be crucial – one thing that came out in our workshop is that humanists need training and support to imagine HPC projects. In particular we need support to clean up our data to the point where it is tractable to HPC methods.

The workshop brought digital humanists, HPC folk, and grant council representatives from across Southern Ontario. Pictures are coming!

Update: Materials about this workshop are now on the SHARCNET DH-HPC wiki.

VersionBeta3 < Main < WikiTADA

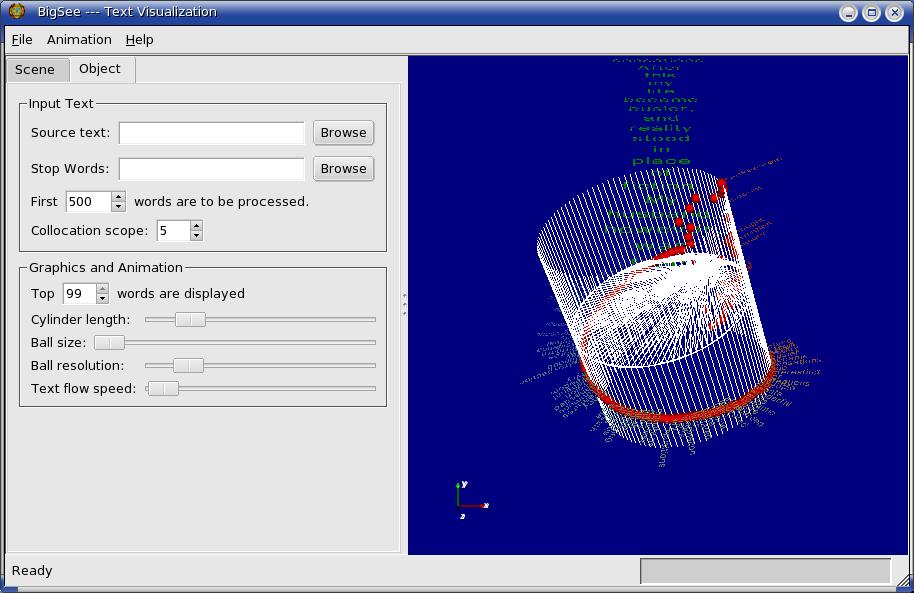

We have a new version of the Big See collocation centroid. Version Beta 3 now has a graphical user interface where you can control settings both before running the animation and after it has run. As before, we show the process of developing the 3D model as an animation. Once it has run, you can manipulate the 3D model. If you turn on stereo and have the right glasses on, you can see the text model as a 3D object (it supports different types, including red/green).

I’m still trying to articulate the goals of the project. Like any humanities computing project, the problem and solutions are emerging as we develop and debate. I now think of it as an attempt to develop a visual model of a text that can be scaled out to very high resolution displays, 3D displays, and high performance computing. The visual models we have in the humanities are primitive – the scrolling page and the distribution graph. TextArc introduced a model, the weighted centroid, that is rich and rewards exploration. I’m trying to extend that into three dimensions while weaving in the distribution graph. Think of the Big See as a barrel of distributions.
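For readers who want the centroid idea made concrete, here is a minimal sketch in Python. It is not the Big See or TextArc code, and the layout is an assumption made for illustration: each token is placed on a unit circle according to its position in the text, and each distinct word is then drawn at the average of the points where it occurs. The function name `word_centroids` is hypothetical.

```python
import math
from collections import defaultdict


def word_centroids(tokens):
    """Return {word: (x, y, count)} for a list of tokens.

    Assumed layout (illustrative only): token i of n sits on the unit
    circle at angle 2*pi*i/n. A word's centroid is the mean of the
    points of all its occurrences, so frequent, evenly spread words
    drift toward the centre and localized words stay near the rim.
    """
    n = len(tokens)
    sums = defaultdict(lambda: [0.0, 0.0, 0])  # running x, y, count
    for i, tok in enumerate(tokens):
        angle = 2 * math.pi * i / n
        entry = sums[tok]
        entry[0] += math.cos(angle)
        entry[1] += math.sin(angle)
        entry[2] += 1
    return {w: (sx / c, sy / c, c) for w, (sx, sy, c) in sums.items()}


if __name__ == "__main__":
    text = "the cat saw the dog and the dog saw the cat"
    for word, (x, y, count) in sorted(word_centroids(text.split()).items()):
        print(f"{word:>4} x={x:+.2f} y={y:+.2f} occurrences={count}")
```

A three-dimensional variant could, for example, use the third axis for a second text or for a word's distribution along the text, but that is a guess at one possible design rather than a description of how Beta 3 works.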

High Resolution Visualization

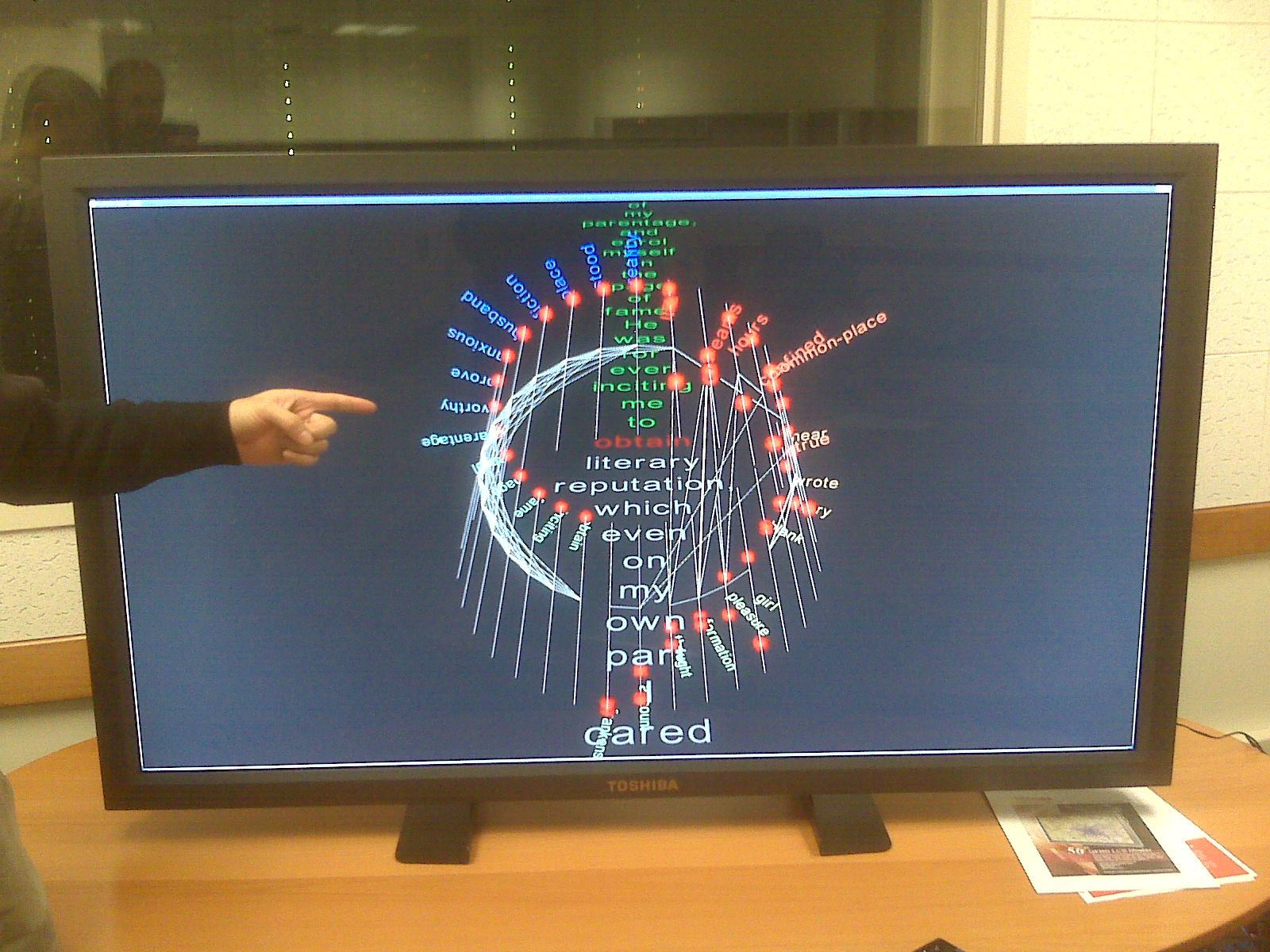

In a previous post I wrote about a High Performance Visualization project. We got the chance to try the visualization on a Toshiba high resolution monitor (something like 5000 X 2500). Above you can see a picture I took with my Blackberry.

What can we do with high resolution displays? What would we show and how could we interact with them? I take it for granted that we won’t just blow up existing visualizations.

Next Steps for E-Science and the Textual Humanities

D-Lib Magazine has a report on next steps for high performance computing (or as they call it in the UK, “e-science”) and the humanities, Next Steps for E-Science, the Textual Humanities and VREs. The report summarizes four presentations on what is next. Some quotes and reactions:

The crucial point they made was that digital libraries are far more than simple digital surrogates of existing conventional libraries. They are, or at least have the potential to be, complex Virtual Research Environments (VREs), but researchers and e-infrastructure providers in the humanities lack the resources to realize this full potential.

I would call this the cyberinfrastructure step, but I’m not sure it will be libraries that lead. Nor am I sure about the “virtual” in research environments. Space matters and real space is so much more high-bandwidth than the virtual. In fact, subsequent papers made something like this point about the shape of the environment to come.

Loretta Auvil from the NCSA is summarized as presenting the Software Environment for the Advancement of Scholarly Research (SEASR), whose

API-driven approach enables analyses run by text mining tools, such as NoraVis (http://www.noraproject.org/description.php) and Featurelens (http://www.cs.umd.edu/hcil/textvis/featurelens/) to be published to web services. This is critical: a VRE that is based on digital library infrastructure will have to include not just text, but software tools that allow users to analyse, retrieve (elements of) and search those texts in ever more sophisticated ways. This requires formal, documented and sharable workflows, and mirrors needs identified in the hard science communities, which are being met by initiatives such as the myExperiment project (http://www.myexperiment.org). A key priority of this project is to implement formal, yet sharable, workflows across different research domains.

While I agree, of course, on the need for tools, I’m not sure it follows that this “requires” us to be able to share workflows. Our data from TAPoR is that it is the simple environment, TAPoRware, that is being used most, not the portal, though simple tools may be a way in to VREs. I’m guessing that the idea of workflows is more of a hypothesis about what will enable the rapid development of domain-specific research utilities (where a utility does a task of the domain, while a tool does something more primitive). Workflows could turn out to be perceived as domain-specific composite tools rather than flows, just as most “primitive” tools have some flow within them. What may happen is that libraries and centres hire programmers to develop workflows for particular teams, in consultation with researchers, for specific resources, and this is the promise of SEASR. When it crosses the Rubicon of reality it will give support units a powerful way to rapidly deploy sophisticated research environments. But if it is programmers who do this, will they want a flow-model application development environment, or will they default back to something familiar like Java? (What is the research on the success of visual programming environments?)

Boncheva is reported as presenting the Generic Architecture for Text Engineering (GATE).

A key theme of the workshop was the well documented need researchers have to be able to annotate the texts upon which they are working: this is crucial to the research process. The Semantic Annotation Factory Environment (SAFE) by GATE will help annotators, language engineers and curators to deal with the (often tedious) work of SA, as it adds information extraction tools and other means to the annotation environment that make at least parts of the annotation process work automatically. This is known as a ‘factory’, as it will not completely substitute the manual annotation process, but rather complement it with the work of robots that help with the information extraction.

The alternative to the tool model of what humanists need is the annotation environment. John Bradley has been pursuing a version of this with Pliny. It is premised on the view that humanists want to closely markup, annotate, and manipulate smaller collections of texts as they read. Tools have a place, but within a reading environment. GATE is doing something a little different – they are trying to semi-automate linguistic annotation, but their tools could be used in a more exploratory environment.

What I like about this report is we see the three complementary and achievable visions of the next steps in digital humanities:

- The development of cyberinfrastructure building on the library, but also digital humanities centres.

- The development of application development frameworks that can create domain-specific interfaces for research that takes advantage of large-scale resources.

- The development of reading and annotation tools that work with and enhance electronic texts.

I think there is a fourth agenda item we need to consider, which is how we will enable reflection on and preservation of the work of the last 40 years. Willard McCarty has asked how we will write the history of humanities computing, and I don’t think he means a list of people and dates. I think he means how we will develop from a start-up and unreflective culture to one that tries to understand itself in change. That means we need to start documenting and preserving what Julia Flanders has called the craft projects of the first generations, which prepared the way for these large scale visions.