McLuhan and Woody Allen from Annie Hall

Today is the 100th anniversary of Marshall McLuhan’s birth, so there are a number of articles about his work, including this one from the Nieman Journalism Lab by Megan Garber, “Webs and whirligigs: Marshall McLuhan in his time and ours.” I also found an article by Paul Miller, aka DJ Spooky, titled “Dead Simple: Marshall McLuhan and the Art of the Record,” which is partly about The Medium Is the Massage, the record McLuhan worked on with others. Right at the top of that article you can listen to a DJ Spooky remix of McLuhan from the record.

Some students here at U of A and I have been working our way through the archives of the Globe and Mail, studying how computing was presented to Canadians, starting with the first articles in the 1950s. McLuhan features in a number of articles because he was eminently quotable and was receiving research funding. The best article is from May 7, 1964 (page 7), by Hugh Munro, titled “Research Project with Awesome Implications.” Here are some quotes:

If successful, they said, it (the project) could produce a foolproof system for analyzing humans and manipulating their behavior, or it could give mankind a surefire method of planning the future and making a world free from large-scale social mistakes. …

They (the team of nine scientists) have undertaken to discover the impacts of culture and technology on each other, or, as Dr McLuhan put it, to discover “how the things we make change the way we live and how the way we live changes the things we make.” …

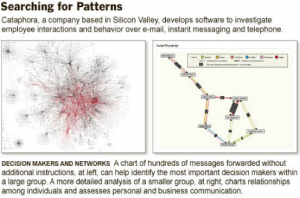

The next stage in the technological revolution that will change man’s perceptions is the computer. But it may hold the secret to the communications problem. With these electronic devices, it is possible to test all manner of things from ads to cities.

The article describes a grant (probably from the Canada Council, but perhaps from a foundation) awarded to an interdisciplinary team of nine “scientists” from medicine, architecture, engineering, political science, psychiatry, museology, anthropology and English. They were going to use computers and head-mounted cameras that track what people look at to understand what people sense, how they are stimulated and how what they sense is conditioned by their background. “The scientists at the Centre (of Culture and Technology at U of T) believe they can define and catalogue the sensory characteristics …”

The idea is that if they can figure out how people are stimulated, then they can figure out how to manipulate them, for good or for bad. “Foolproof ads could be designed. ‘Madison Avenue could rule the world,’ Dr. McLuhan said. ‘The IQs of illiterate people could be raised dramatically by new educational methods.’”

Oh to be so confident about research outcomes!