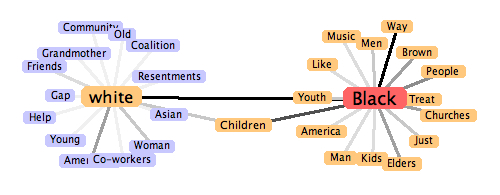

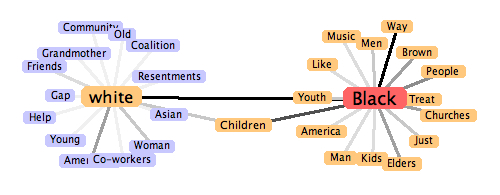

Stéfan Sinclair and I have just finished writing up an essay from an extreme text analysis session, Now, Analyze That. It is first of all a short essay comparing Obama’s and Wright’s recent speeches on race. The essay reports on what we found in a two-day experiment using our own tools, and it has interactive handles woven in that let you recapitulate our experiment.

The essay was written in order to find a way to write interpretative essays that are based on computer-assisted text analysis and exhibit their evidence appropriately without ending up being all about the tools. We are striving for a rhetoric that doesn’t hide text analysis methods and tools, but is still about interpretation. Having both taught text analysis, we have found that there are few examples of short, accessible essays about something other than text analysis that still show how text analysis can help. The analysis either colonizes the interpretation or it is hidden and hard for students and others to recapitulate. Our experiments are therefore attempts to write such essays and document the process from conception (coming up with what we want to analyze) to online publication.

Doing the analysis in a pair, where one of us did the analysis and one documented and directed, was a discovery for me. You really do learn more when you work in a pair and force yourself to take roles. I’m intrigued by how agile programming practices can be applied to humanities research.

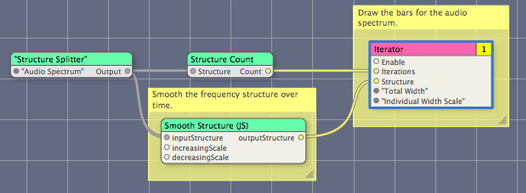

This essay comes out of our second experiment. The first wasn’t finished because we didn’t devote enough time together to it (we really need about two days, and that doesn’t include writing up the essay). There will be more experiments, as the practice of working together has proven a very useful way to test the TAPoR Portal and think through how tools can support research all the way through the life of a project, from conceptualization to publication. I suspect that as we try different experiments we will be changing the portal and the tools. Too often tools are designed for the exploratory stage of research instead of the whole cycle, right through to where you write an essay.

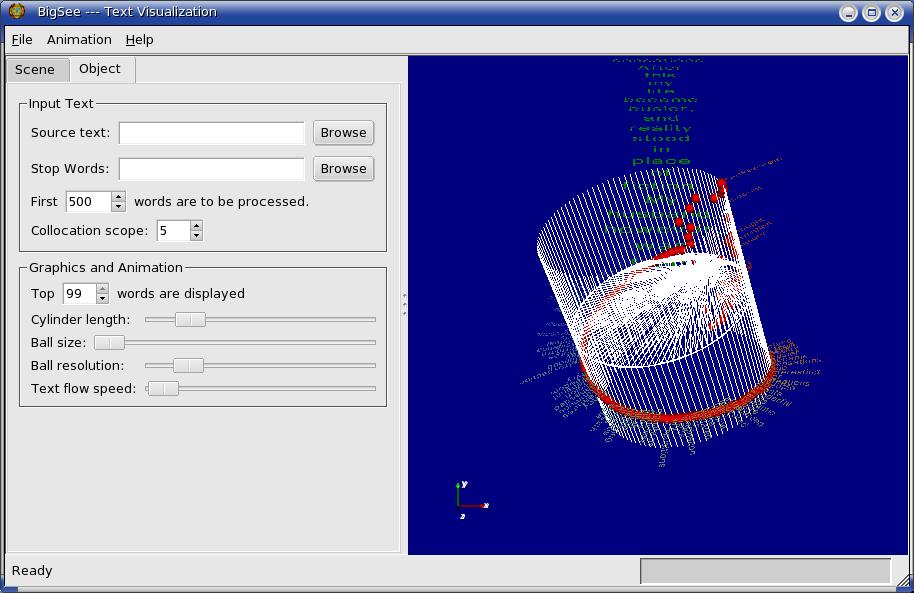

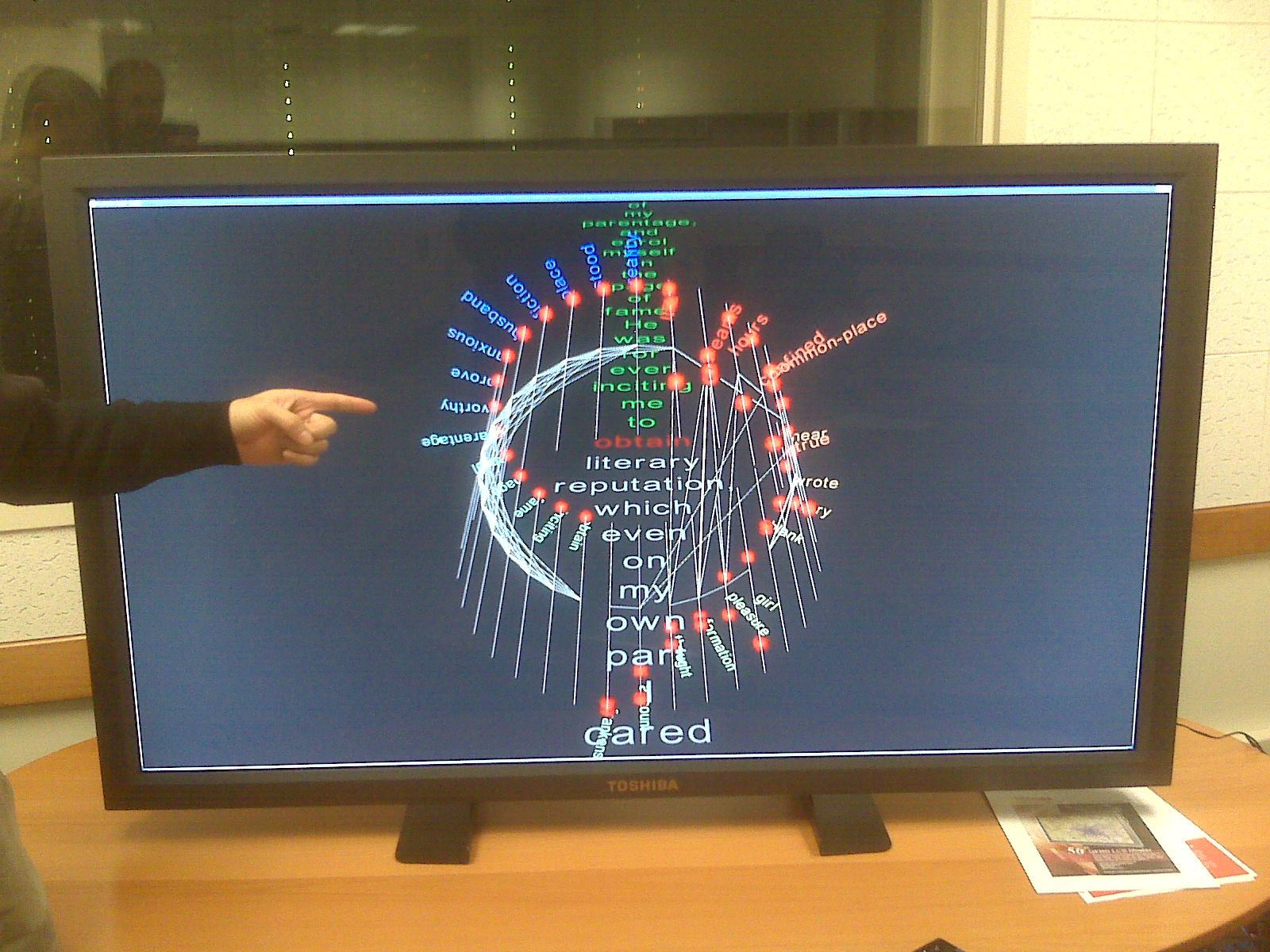

You can, of course, actually use the same tools we used on the essay itself. At the bottom of the left-hand column there is an Analysis Tool bar that gives you tools that will run on the page itself.