As A.I.-generated data becomes harder to detect, it’s increasingly likely to be ingested by future A.I., leading to worse results.

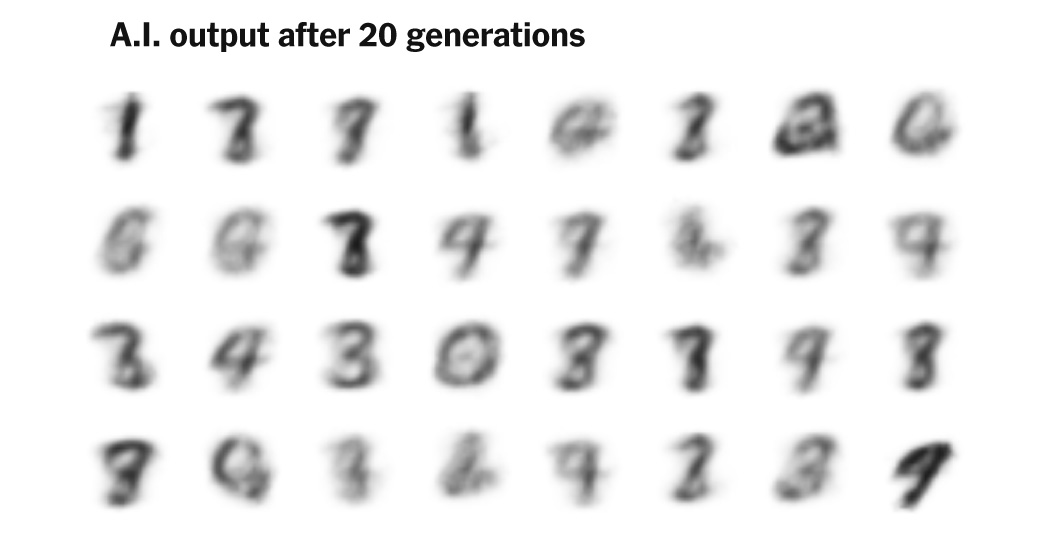

The New York Times has a terrific article on model collapse, When A.I.’s Output Is a Threat to A.I. Itself. They illustrate what happens when an AI is repeatedly trained on its own output.

Model collapse is likely to become a problem for new generative AI systems trained on the internet which, in turn, is more and more a trash can full of AI generated misinformation. That companies like OpenAI don’t seem to respect the copyright and creativity of others makes is likely that there will be less and less free human data available. (This blog may end up the last source of fresh human text 🙂

The article also has an example of how output can converge and thus lose diversity as it trained on its own output over and over.

Perhaps the biggest takeaway of this research is that high-quality, diverse data is valuable and hard for computers to emulate.

One solution, then, is for A.I. companies to pay for this data instead of scooping it up from the internet, ensuring both human origin and high quality.