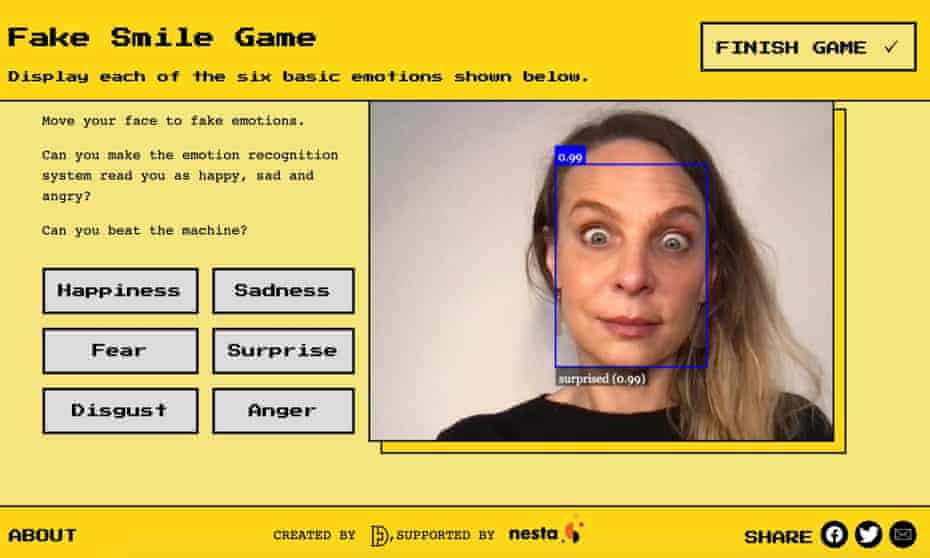

Public can try pulling faces to trick the technology, while critics highlight human rights concerns

From the Guardian story "Scientists create online games to show risks of AI emotion recognition", I discovered Emojify, a website with games that show how problematic emotion detection is. Researchers are worried by the booming business of emotion detection with artificial intelligence. For example, it is being used in education in China. See the CNN story "In Hong Kong, this AI reads children's emotions as they learn".

A Hong Kong company has developed facial expression-reading AI that monitors students’ emotions as they study. With many children currently learning from home, they say the technology could make the virtual classroom even better than the real thing.

With cameras everywhere, this should worry us. Not only can we be identified by face recognition, but now they want to know our inner emotions too. What sort of theory of emotions licenses these systems?