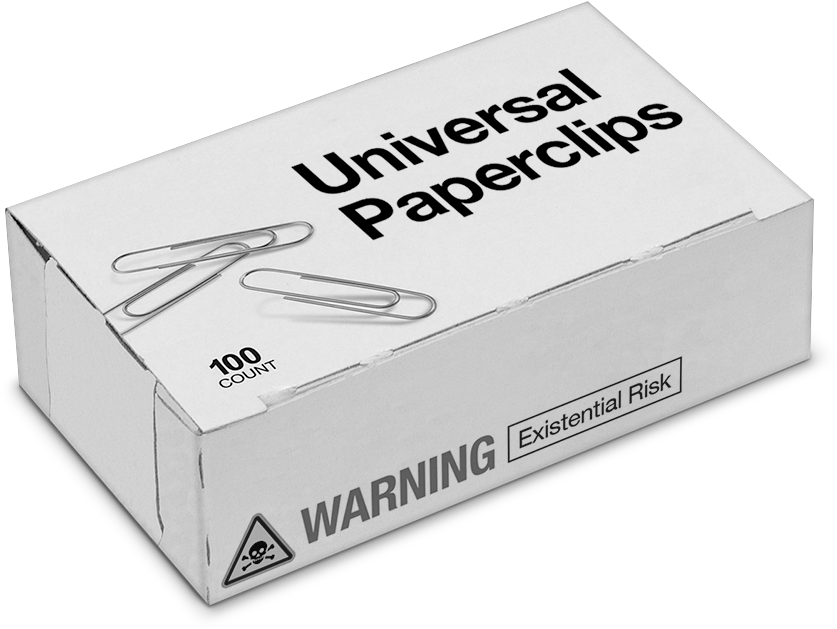

I just finished playing Universal Paperclips, which was surprisingly fun. It took me about 3.5 hours to get to sentience. In the game you are an AI running a paperclip company, and you make decisions and investments. The game was inspired by the philosopher Nick Bostrom’s paperclip maximizer thought experiment, which illustrates the risk that a seemingly harmless AI in charge of making paperclips might evolve into an AGI (Artificial General Intelligence) and pose a risk to us. It might even convert all the resources of the universe into paperclips. The thought experiment appears in Bostrom’s paper Ethical Issues in Advanced Artificial Intelligence, where it illustrates the point that “Artificial intellects need not have humanlike motives.”

> Humans are rarely willing slaves, but there is nothing implausible about the idea of a superintelligence having as its supergoal to serve humanity or some particular human, with no desire whatsoever to revolt or to “liberate” itself. It also seems perfectly possible to have a superintelligence whose sole goal is something completely arbitrary, such as to manufacture as many paperclips as possible, and who would resist with all its might any attempt to alter this goal. For better or worse, artificial intellects need not share our human motivational tendencies.

The game is rather addictive despite its simple interface, where all you can do is click buttons to make decisions. The decisions available to you change over time, and new panels open up for exploration.

I learned about the game from an interesting blog entry by David Rosenthal, It Isn’t About The Technology. It is a response to the enthusiasm about Web 3.0 and decentralized technologies (blockchain) and how they might save us, to which Rosenthal replies that it isn’t about the technology.

One of the more interesting ideas that Rosenthal mentions comes from Charles Stross’s keynote for the 34th Chaos Communication Congress, to the effect that businesses are “slow AIs”. Corporations are machines that, like the paperclip maximizer, are self-optimizing and evolve until they are dangerous, something we are seeing with Google and Facebook.